Changelog

Mar 2, 2026

Let agents manage tags, Structured Q&A analysis, Modes are now enforced in chat, Attachments persist across modes, Focus narrowing is now an explicit step, “Research and cluster” mode is renamed to “Clusters”, Smarter PII redaction, Settings UX refinements

AI tag descriptions are now on by default (opt-out)

Teams rely on Tags to narrow the data in Focus in chat. To help the system interpret Tags accurately, new Tags now get an AI-generated description by default, with a clear opt-out if you prefer to manage descriptions yourself. This improves tag quality from day one and makes Focus behavior in chat more reliable. A backfill tool can generate missing descriptions for existing Tags.

Structured Q&A analysis (Beta)

Teams running structured interviews want Highlights that follow their interview guide, but generic extraction can lose the question-by-question structure. We added a new capability that detects Q&A blocks and turns them into Highlights that preserve the original format. This makes research outputs easier to review and analyze by question. The feature is currently in beta.

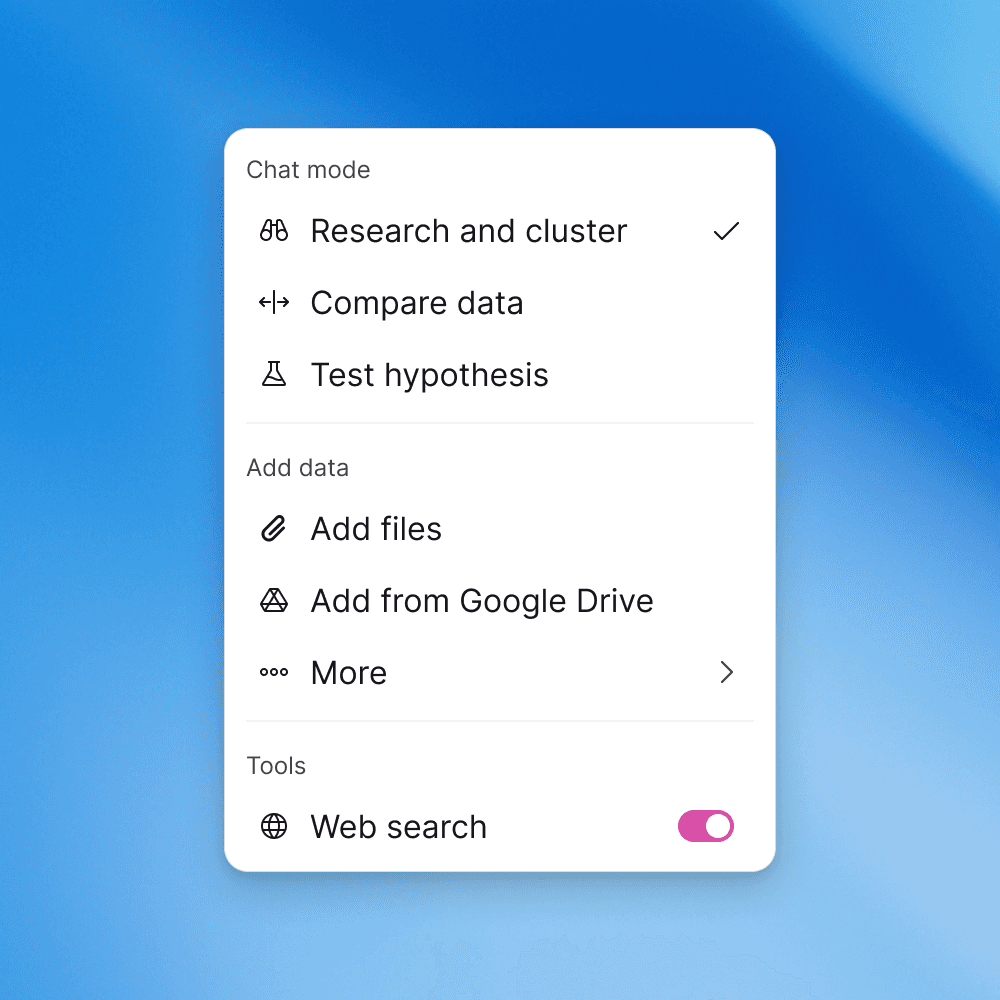

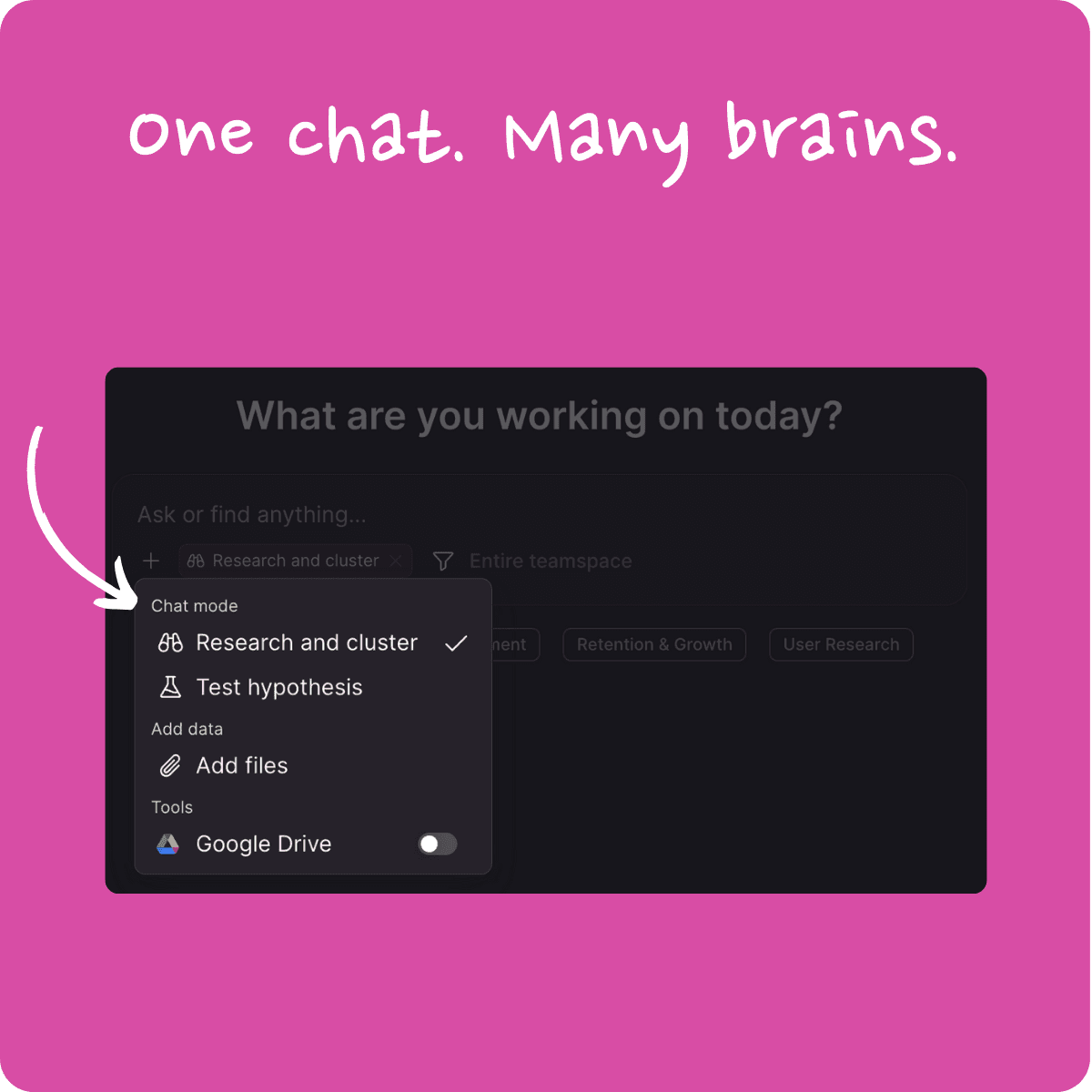

Modes are now enforced in chat

Users choose Modes to get a specific outcome. Now, when you select a Mode (Hypothesis, Clusters, Podcast, Presentation…), the AI agent will run that Mode’s core workflow before responding. This makes results more predictable and ensures the output matches the Mode you picked.

Attachments persist across modes

Uploaded files and selected sources are now sticky in chat, even if you change Modes in the same thread. Multi-message chats remain grounded in the exact same input data.

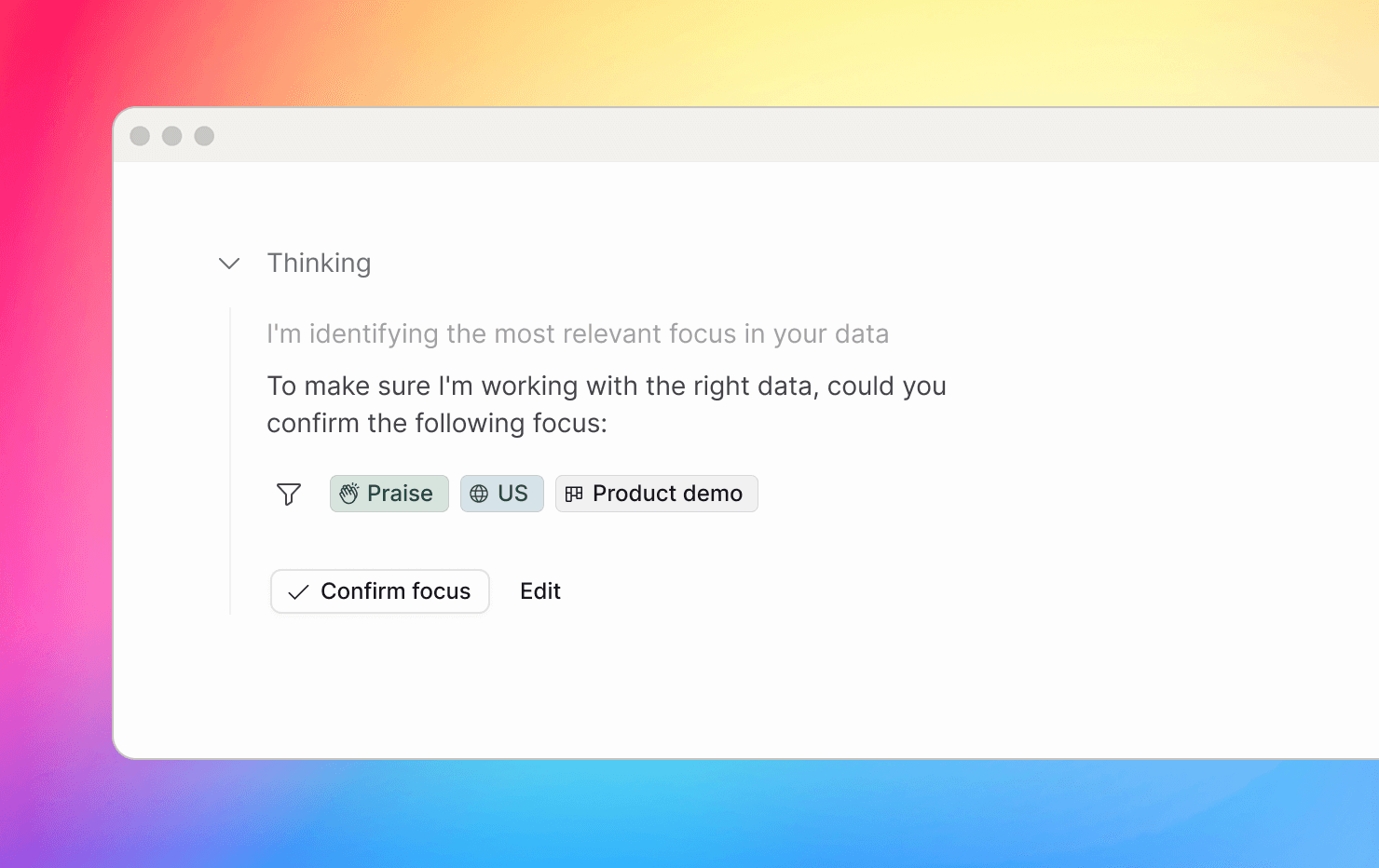

Focus narrowing is now an explicit step

Chat now runs a dedicated propose_focus_change step before retrieving data. The step appears under Thinking, both while a response is streaming and in saved history. This makes it clear when Focus was set and why, so teams can see exactly which data the AI agent is using to produce responses.

“Research and cluster” mode is renamed to “Clusters”

Users see “clusters” in the product and already use that language in conversation. We renamed the “Research and cluster” mode to “Clusters” to keep terminology consistent and reduce cognitive load.

Smarter PII redaction

We upgraded PII redaction models to better handle context and multilingual input, improving the readability of redacted text while still protecting sensitive information. As before, sensitive data is removed before anything is sent to external AI services.

Settings UX refinements

Creating a Job now opens it directly, Settings table selections are deep-linkable, tag creation respects the active group filter, group editing keeps your place, and teamspace switching shows clear loading states.

Feb 23, 2026

Chat now frames results by focus size, Manage roles per teamspace, Faster AI tuning in playground, New transcription models, Bulk-edit Highlights in one go, Improved REST API & developer docs, Clearer search bar experience, and Better error handling in chat

Bulk edit Highlight metadata

Teams often need to keep highlight metadata consistent across large datasets, but updating fields like accounts or source used to be slow and manual. You can now select multiple Highlights and apply metadata updates in one step. This makes it practical to clean up historical data, keep properties aligned as datasets grow, and maintain reliable structure for downstream analysis.

Teamspace roles & permissions

Organizations want different access levels by Teamspace, but roles were previously harder to manage without over-permissioning. Roles can now be managed at the Teamspace level, so a user can be an admin in one Teamspace and read-only or guest in another. This gives tighter control and prevents granting broader access than needed.
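To make the idea of per-Teamspace roles concrete, here is a minimal sketch that keys roles by (user, Teamspace) rather than by organization. The role names come from the text above; the permission sets, the `can` helper, and the example users are hypothetical and do not describe NEXT AI's actual permission model.

```python
from enum import Enum


class Role(Enum):
    ADMIN = "admin"
    READ_ONLY = "read-only"
    GUEST = "guest"


# Hypothetical permission sets per role; the real product may differ.
PERMISSIONS = {
    Role.ADMIN: {"read", "write", "manage_users"},
    Role.READ_ONLY: {"read"},
    Role.GUEST: {"read_shared"},
}

# Roles are assigned per (user, teamspace), not once per organization.
assignments = {
    ("ana", "research"): Role.ADMIN,
    ("ana", "marketing"): Role.READ_ONLY,
    ("ben", "research"): Role.GUEST,
}


def can(user: str, teamspace: str, action: str) -> bool:
    """Return True if the user's role in this teamspace allows the action."""
    role = assignments.get((user, teamspace))
    # No assignment means no access to that teamspace at all.
    return role is not None and action in PERMISSIONS[role]


print(can("ana", "research", "manage_users"))  # True
print(can("ana", "marketing", "write"))        # False
```

The same user gets different answers in different Teamspaces, which is what avoids granting broader access than needed.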

Chat can reflect on its Focus

Chat answers can feel overly definitive — meaning the answer can read like a general truth about the whole dataset — even when it’s actually based on a smaller set of data. Chat now has structured awareness of the size and composition of the dataset in Focus, so it can frame Clusters and insights relative to the broader dataset. This helps teams judge signal strength, reduces over-interpretation of small samples, and makes analysis more transparent.
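As an illustration of what framing by focus size can mean in practice, this sketch describes a cluster relative to both the Focus and the full dataset, and flags small samples. The function name, the wording, and the 30-highlight threshold are hypothetical choices for the example, not NEXT AI's actual logic.

```python
def frame_cluster(cluster_size: int, focus_size: int, dataset_size: int,
                  min_sample: int = 30) -> str:
    """Describe a cluster relative to the focus and to the whole dataset."""
    if not 0 < focus_size <= dataset_size:
        raise ValueError("focus must be non-empty and no larger than the dataset")
    share_of_focus = 100 * cluster_size / focus_size
    focus_coverage = 100 * focus_size / dataset_size
    text = (f"{cluster_size} of {focus_size} highlights in focus ({share_of_focus:.1f}%); "
            f"the focus covers {focus_coverage:.1f}% of {dataset_size} highlights")
    if focus_size < min_sample:
        # Hypothetical threshold: flag small samples so results are not over-read.
        text += " (small sample, interpret with care)"
    return text


print(frame_cluster(12, 80, 4000))
# 12 of 80 highlights in focus (15.0%); the focus covers 2.0% of 4000 highlights
```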

AI suitability evaluation in simulations

Tuning highlight generation, tagging, or clustering often requires expert judgment and trial-and-error. We added an AI suitability evaluator that scores whether generated Highlights are structured and complete enough for downstream AI workflows and explains what passed or failed inside simulations. This makes it faster to iterate toward best-practice setups for retrieval, automation, and agentic use cases.

Transcription models upgraded

Accurate transcripts are critical for voice-based data (like Recordings), especially in multilingual environments. Our transcription pipeline now runs on a new generation of speech models with improved accuracy and better language support. This leads to clearer transcripts, stronger Highlights, and more reliable downstream AI analysis.

Redesigned developer portal with quickstart

Get started with our API like never before. The developer portal has been redesigned with a clearer structure, dedicated explanatory pages, and a proper quickstart tutorial. Tutorials are backed by automated daily tests that keep the docs in sync with the API, resulting in faster onboarding and more reliable integration guidance.

REST API docs improved

Building integrations is easier when docs explain intent, not just schemas. Our REST API docs now focus on when to call an endpoint, what happens behind the scenes, and what to expect immediately versus asynchronously—especially for AI-driven workflows. This makes integrations easier to implement and maintain.

Always show dates

When items happened “today” we previously showed only the time, which made it easy to lose temporal context in lists. We now always show the date (including for today), so it’s immediately clear when something happened without relying on surrounding context.

Soft error handling for tools in chat

When a tool like Podcast generation fails, chat now notifies you and falls back to text instead of failing silently.
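The fallback pattern can be sketched as a try/except around the tool call that returns the text answer plus a visible notice instead of failing silently. `generate_podcast`, `ToolError`, and the notice wording are hypothetical stand-ins, not NEXT AI's internals.

```python
class ToolError(Exception):
    """Raised when a chat tool (for example, podcast generation) fails."""


def generate_podcast(text: str) -> str:
    # Hypothetical tool that always fails, to demonstrate the fallback path.
    raise ToolError("audio service unavailable")


def run_with_fallback(tool, answer_text: str) -> dict:
    """Try the tool; on failure, notify the user and fall back to the text answer."""
    try:
        return {"kind": "tool_output", "content": tool(answer_text), "notice": None}
    except ToolError as err:
        # Surface the failure to the user instead of swallowing it.
        return {
            "kind": "text",
            "content": answer_text,
            "notice": f"{tool.__name__} failed ({err}); showing a text answer instead.",
        }


result = run_with_fallback(generate_podcast, "Top themes: onboarding, pricing.")
print(result["kind"])    # text
print(result["notice"])
```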

Search editor simplified for ease of use

Floating and Fixed search now behave consistently: the “X” is gone, Clear is the only reset, and Floating search applies changes only on Continue.

Feb 16, 2026

Podcast mode is here, Web search becomes a mode, Skills help you steer chat, CSV export from chat, and translate highlights

Podcast mode is here

Everyone loves a good podcast, and so do we! With Podcast mode, you can now generate a natural-sounding audio episode in chat from your question and findings, designed to be easy to listen to and easy to pass along. Choose a format (summary, deep dive, critique, debate) and a voice style, then share the audio so teammates can catch up on insights on the go.

Web search becomes a mode

Web search previously could trigger even when it wasn’t explicitly enabled, which created confusion about where answers came from. Web search is now strictly opt-in: it only runs when you select it as a Mode. This makes data boundaries clear and keeps chat grounded in your internal feedback unless you deliberately bring in external context.

Skills help you steer chat

The old “Procedures” concept no longer matched how teams think about guiding AI behavior. We renamed Procedures to Skills and shifted the experience to a simpler free-text model, so it’s easier to describe what the AI should do without navigating structured steps. This reduces configuration friction and makes AI behavior easier to understand, maintain, and scale as workflows become more agent-driven.

CSV export in chat

Teams often want to take structured outputs from chat into spreadsheets for deduplication, reporting, and sharing. We added a CSV export tool that lets you download tables generated in chat and continue in Excel. The export uses a reliable backend flow (structured data in → CSV generated → secure upload → download link out) instead of sandbox-style file generation. The result is a simpler, safer export experience for spreadsheet-heavy workflows.
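The first step of that flow, structured data in and CSV out, can be sketched with Python's standard `csv` module, which takes care of quoting values that contain commas. The column names and rows are made up for illustration; the secure upload and download-link steps happen on the product side and are not shown.

```python
import csv
import io


def rows_to_csv(rows: list[dict]) -> str:
    """Serialize a chat table (a list of row dicts) into CSV text."""
    if not rows:
        return ""
    buffer = io.StringIO()
    # Use the first row's keys as the header row.
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


table = [
    {"theme": "Onboarding", "mentions": 42},
    {"theme": "Pricing, billing", "mentions": 17},
]
print(rows_to_csv(table))
```

Generating the file from structured data, rather than letting the model write file contents directly, is what keeps the output predictable.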

Translate highlight quotes

Highlights are most useful when teams can read the original customer quote, but language barriers slow analysis in global orgs. You can now translate highlight quotes directly in the detail dialog with one click. Switch between the translated version and the original text anytime. This keeps the authenticity of the quote while making insights instantly accessible to international teams.

Refinements to chat redesign

We shipped a set of UI refinements in chat to improve clarity and consistency, including better responsiveness, visible Focus in the header, and clearer navigation and panel controls. We also fixed scrollbar alignment across navigation and chat.

Feb 9, 2026

Playground to simulate jobs/agents, Timeline graphs in chat, Full context in chats, Follow along with chat's thinking, Settings gets a revamp, Easy access to highlight source, and Easier date filtering in highlights

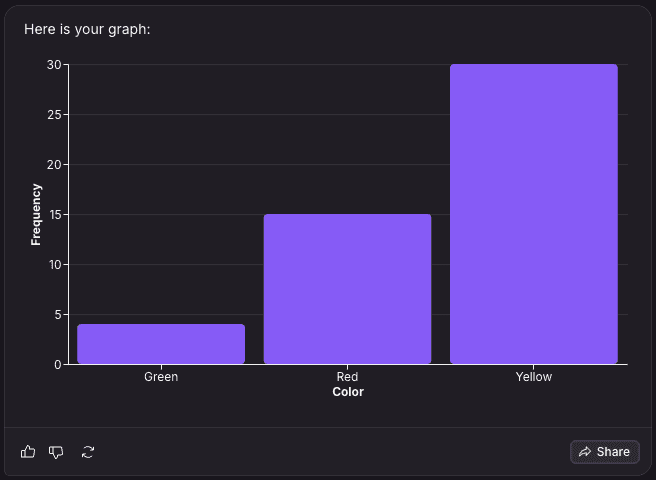

Timeline graphs in chat

Analytics in chat now include timeline graphs. This makes it easy to spot recent momentum, identify spikes in feedback, and connect changes to launches or external events.

AI Simulations for jobs/agents

We're introducing a persistent simulation and evaluation workflow for your jobs/agents. You can now run end-to-end simulations on your data pipeline with real data, see how highlights would look after import, save test cases, rerun them over time, define success criteria (including LLM-based evaluation), and set up auto-monitoring that flags what passed or failed, and why. You can expect higher coverage of your data, systematic quality checks, and a test-driven way to optimize your setup as your data, system configurations, and AI models evolve.

Easy access to highlight source

When you’re working across multiple surveys, integrations, and ingestion pipelines, knowing where a highlight came from is essential. The highlight detail dialog now shows the originating source system alongside existing context like recordings, tags, and timestamps.

Easier date filtering in highlights

Filtering highlights by time no longer requires advanced search syntax. Search now supports intuitive date filtering to let you narrow down to specific months, custom ranges, or relative periods like “last quarter”. Just type date: and enter a range (e.g. date:December 2025).
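To illustrate what a month-style value resolves to, here is a minimal sketch that turns `December 2025` into an inclusive start/end date pair. It only handles the `Month YYYY` form (English month names); the function name and return shape are assumptions, not the product's parser.

```python
import calendar
from datetime import date, datetime


def month_range(value: str) -> tuple[date, date]:
    """Resolve a value like 'December 2025' to its first and last day, inclusive."""
    parsed = datetime.strptime(value.strip(), "%B %Y")
    # monthrange returns (weekday of the first day, number of days in the month).
    last_day = calendar.monthrange(parsed.year, parsed.month)[1]
    return date(parsed.year, parsed.month, 1), date(parsed.year, parsed.month, last_day)


print(month_range("December 2025"))
# (datetime.date(2025, 12, 1), datetime.date(2025, 12, 31))
```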

Follow along with chat's thinking

We improved the way chat surfaces its thinking process to help you follow along and better understand its reasoning. You can now see how the thinking steps build on each other, which helps build trust in the answers.

Chats now show their full context (not just the focus)

Chats used to show only the data focus they were running on, not the underlying data sources or modes. We've changed that: in addition to the data focus, chats now show:

the selected mode — including tools (e.g. web search)

data sources

any attached files

You can now see the full context the AI is operating on in chat.

System settings gets a revamp

Settings is now a full-page experience with dedicated navigation and more space to work. Teams have shown a clear appetite to configure and take control of the AI in the system, and this new layout gives us the space to present richer functionality as the product grows.

Feb 1, 2026

Better planning in chat, Streamlined navigation, Redesigned settings dialog, Improved data focus experience in chat, Search in AI Jobs, Deep links and timestamps for highlights

Plan-first in chat for better multi-step analysis

Great analysis starts with understanding what you’re actually trying to learn. This week, we’re introducing a plan-first flow in chat that helps AI get it right from the start. Think of it like a quick planning session with an insights lead before diving into the data. In multi-step workflows (data scoping → clustering → quantification → synthesis), executing one step at a time can produce incomplete or suboptimal results because the AI agent can’t reason about dependencies upfront. Now, complex questions like “Create a report on the top issues last quarter, group results by theme, and quantify each theme for signal strength” will feel like a breeze.

We're rapidly expanding our AI's capabilities in this space. Next step: you'll be able to interact with and influence the plan, and collaborate with AI.
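One way to picture reasoning about dependencies upfront is to write the plan as a dependency graph and order it before running anything. This sketch uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+); the step names mirror the workflow described above, but the graph itself is an illustrative assumption, not how NEXT AI's planner is built.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps it depends on.
plan = {
    "data scoping": set(),
    "clustering": {"data scoping"},
    "quantification": {"clustering"},
    "synthesis": {"clustering", "quantification"},
}

# static_order() yields the steps so that every dependency comes first,
# and raises graphlib.CycleError if the plan contradicts itself.
order = list(TopologicalSorter(plan).static_order())
print(order)  # ['data scoping', 'clustering', 'quantification', 'synthesis']
```

Ordering the whole plan first means a step like synthesis never runs before the clusters and counts it relies on exist.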

Redesigned settings dialog

We redesigned the settings navigation around the actual flow of NEXT AI: connecting data, making AI your own, and then asking/getting/automating insights. Related settings are grouped more clearly in a way that reflects the user's journey, and the structure helps users see at a glance where to configure what. The result is a more intuitive settings experience that will scale better as the product grows.

No more empty focus in chat

In chat, we now always show the number of highlights in focus, and we explicitly warn users when a chat's data focus is empty, calling out that there's no data to answer your question.

Search in Jobs configuration

As customers scale their use of AI Jobs (i.e. data agents), long configuration lists become hard to navigate. We added search to the Jobs configuration page so you can quickly filter and find the Job you’re looking for—making agent management faster and more scalable.

Streamlined main navigation

We merged the top navigation into the left navigation to free up space for your content. Key actions like inviting users are now always visible, the navigation can be collapsed for even more room, and folder-style items (like Recordings and Highlights) now clearly indicate that they expand/collapse.

Deep links to highlights

We now support deep links to individual highlights in clusters, chat, and everywhere else. You can bookmark important moments, share an exact highlight with colleagues, and open the right context instantly.

Timestamps in the highlight dialog

Highlight detail views now show the recording date and time. This adds critical temporal context when reviewing a clip, ticket, or survey response—making it easier to relate feedback to releases or events and compare insights over time with more confidence.

Jan 26, 2026

Human-in-the-loop in chat, GPT-5.2, Share to Lovable, Support for quant data from SFMC, Better data/range handling, Survey-level context, Upload accounts with CSV, Quicker access to quotes

Human-in-the-loop in chat (for agentic tasks)

We improved how chat decides what data to focus on for agentic tasks. Previously, it would auto-narrow the scope, which worked most of the time but sometimes picked the wrong focus. Now, when you prompt, chat proposes a focus (topic, product area, date/range, segment, etc.) and pauses so you can confirm or adjust before it runs. Chat then resumes from that point—so results are more accurate, the scope is explicit, and there’s less guesswork.

Better date filtering: calendar vs. rolling periods

Relative filters like “last month” were often read as calendar periods while the system treated them as rolling windows. We now make the distinction explicit, removing ambiguity around month/year boundaries.
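The difference is easiest to see with concrete dates. This minimal sketch computes both readings of “last month” for the same day; the function names are hypothetical, and the 30-day window length is an assumption chosen for illustration.

```python
from datetime import date, timedelta


def last_month_calendar(today: date) -> tuple[date, date]:
    """Previous calendar month, e.g. all of December when today is in January."""
    last_of_prev = today.replace(day=1) - timedelta(days=1)
    return last_of_prev.replace(day=1), last_of_prev


def last_month_rolling(today: date, days: int = 30) -> tuple[date, date]:
    """Rolling window ending today (the 30-day length is an assumption)."""
    return today - timedelta(days=days), today


today = date(2026, 1, 26)
print(last_month_calendar(today))  # (datetime.date(2025, 12, 1), datetime.date(2025, 12, 31))
print(last_month_rolling(today))   # (datetime.date(2025, 12, 27), datetime.date(2026, 1, 26))
```

The two windows overlap but are not the same: the rolling one drops early December and adds most of January, which is exactly the month-boundary ambiguity the change removes.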

Chat now runs on GPT-5.2

We upgraded chat completions to GPT-5.2 to improve recall in detail-heavy workflows. Outputs are more complete and better grounded when you ask for thorough coverage.

Chat support for quant data from Salesforce Marketing Cloud surveys

Many SFMC surveys are mostly scores and selections, with little or no open text. We now transform SFMC survey data into structured raw datasets, making quantitative responses fully queryable (totals, averages, distributions, and breakdowns by attributes like country). Teams can analyze survey metrics at scale and combine them with qualitative insights for more reliable reporting.
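As an illustration of the kinds of queries structured survey rows make possible, here is a minimal sketch that computes counts, averages, and score distributions broken down by an attribute. The field names (`country`, `score`), the `summarize_by` helper, and the sample data are hypothetical.

```python
from collections import Counter, defaultdict
from statistics import mean


def summarize_by(rows: list[dict], attribute: str, metric: str) -> dict:
    """Count, average, and distribution of a metric, broken down by an attribute."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[attribute]].append(row[metric])
    return {
        key: {
            "count": len(values),
            "average": mean(values),
            "distribution": dict(Counter(values)),
        }
        for key, values in sorted(groups.items())
    }


responses = [
    {"country": "DE", "score": 9},
    {"country": "DE", "score": 7},
    {"country": "FR", "score": 4},
    {"country": "FR", "score": 9},
    {"country": "FR", "score": 8},
]
summary = summarize_by(responses, "country", "score")
print(summary["FR"])
```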

Quant-only SFMC responses no longer create highlights

Some SFMC responses contain only numeric scores with no comments, which can create noisy, generic highlights. We now exclude quantitative-only responses without open comments from becoming highlights—so datasets stay focused on actionable written feedback.
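The rule can be expressed as a simple filter: a response becomes a highlight candidate only if it carries non-empty open text, while score-only responses stay available as quantitative data. This is a minimal sketch; the `comment` field name and the sample rows are assumptions.

```python
def highlight_candidates(responses: list[dict]) -> list[dict]:
    """Keep responses with a written comment; drop score-only responses."""
    return [
        r for r in responses
        # Missing, None, or whitespace-only comments count as "no open text".
        if (r.get("comment") or "").strip()
    ]


rows = [
    {"id": 1, "score": 9, "comment": "Checkout was quick."},
    {"id": 2, "score": 3, "comment": "   "},
    {"id": 3, "score": 7},
    {"id": 4, "score": 5, "comment": None},
]
print([r["id"] for r in highlight_candidates(rows)])  # [1]
```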

Survey-level context

Short answers like “I like it” are hard to interpret without context. You can now provide survey-level context (name, purpose, evaluated feature) for ingestion, giving the AI a stronger foundation for interpretation without changing the original responses.

Share chats directly to Lovable

You can now share a chat response directly to Lovable to kick off a prototyping session based on the conversation—making it faster to move from customer feedback to a working product idea.

Lovable connection is an enterprise feature. Contact success@nextapp.co for access.

Import accounts via CSV

You can now upload Accounts via CSV and control account IDs as part of the import. This makes it easy to link recordings and highlights to the right accounts and keep analysis consistent. See docs for more.

… and a few improvements

Manual sharing to Microsoft Teams

Last week we added Teams for automations. Now you can also manually share highlights, clusters, and chats to Microsoft Teams.

Better control of response format in chat

Chat will no longer produce clusters or comparisons on its own—making behavior more predictable and keeping deep analysis under your control. Choose a mode for advanced responses.

Faster access to highlight quotes for video/audio items

Media highlights now show the full quote alongside the audio/video in the highlight dialog, so you can scan what was said without pressing play.

Jan 19, 2026

Microsoft Teams support for automations, better "Assess hypothesis" mode, smarter survey imports from GetFeedback & Salesforce Marketing Cloud

Microsoft Teams support for automations

We’ve added Microsoft Teams as a supported automation destination, so scheduled messages/tasks and AI-generated outputs can be posted directly into Teams channels. This brings automations to enterprise environments where Teams is the collaboration hub, and ensures insights reach people where they already work — without changing their workflow.

Improved support for country and language values for GetFeedback (Usabilla) imports

Country and language values previously came in different formats, which led to duplicates in filters and dashboards and fragmented analysis. We’ve normalized these fields to a single canonical format, aligned with other integrations, and migrated existing data to match. Country- and language-based insights are now consistent, comparable across sources, and much easier to analyze reliably over time.
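Normalization of this kind usually comes down to mapping every incoming variant to one canonical value. The sketch below assumes ISO 3166-1 alpha-2 codes as the canonical format and uses a tiny hand-written alias table; both are assumptions for illustration, not the format NEXT AI actually stores.

```python
from typing import Optional

# Hypothetical alias table: lowercase variant -> canonical ISO 3166-1 alpha-2 code.
COUNTRY_ALIASES = {
    "de": "DE",
    "deu": "DE",
    "germany": "DE",
    "deutschland": "DE",
    "nl": "NL",
    "nld": "NL",
    "netherlands": "NL",
    "the netherlands": "NL",
}


def normalize_country(raw: Optional[str]) -> Optional[str]:
    """Map a raw country value to its canonical code, or None if unknown."""
    if not raw:
        return None
    return COUNTRY_ALIASES.get(raw.strip().lower())


values = ["Germany", "DEU", " de ", "The Netherlands", "Atlantis"]
print([normalize_country(v) for v in values])  # ['DE', 'DE', 'DE', 'NL', None]
```

Once every source maps to the same codes, "Germany" and "DE" stop appearing as two separate entries in filters and dashboards.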

Improved Salesforce Marketing Cloud field mapping

Salesforce Marketing Cloud imports previously included many raw fields that weren’t consistently mapped to meaningful concepts in NEXT AI, making analysis noisy. We’ve updated the mapping to focus on high-signal fields — correctly assigning ratings, tags, accounts, and timestamps, and normalizing attributes like age, gender, country, and collection into consistent formats. Teams now benefit from cleaner survey data that’s easier to segment, filter, and turn into insights across teams.

Better "Assess hypothesis" mode in chat

We updated "Assess hypothesis" mode to support exploratory analysis instead of forcing a pros vs. cons split. The mode now surfaces relevant evidence for the hypothesis as written—helping you understand how broadly it shows up in the dataset without a binary framing.

Claimed domains now enforce SSO-only access

If your company domain is claimed by an SSO tenant, users can now only access NEXT AI through SSO. Logging in via magic link is no longer available for claimed domains.

Previously, we allowed users with claimed domains to create separate personal/test workspaces without SSO. This is no longer possible. The result is a cleaner, safer enterprise experience: no accidental “shadow tenants,” no confusing login paths, and consistent access aligned with IT policy.

… and a few improvements

Easier access to highlight details in the Library

You can now open highlight details by clicking the highlight title in the table (instead of the thumbnail).

Clearer sharing without misleading attribution

We’ve removed the “created by” attribution from share experiences to avoid attributing an AI-generated output to the person who configured it.

Neutral trend indicators in clusters

We replaced green/red cluster trend colors with neutral indicators that simply show if mentions of a cluster are trending up or down. This prevents misleading “good/bad” interpretation and makes trends easier to read for any topic.

Jan 12, 2026

Data retrieval error rate <5%, PDF support in data, Sticky chat context, New tools in chat, Simpler prompt bar

Data retrieval error rate now <5%

Chat is only as good as the data it can retrieve. When you’re working with large volumes of customer feedback (pretty much anything above the LLM's context window), the hard part isn’t generating an answer — it’s reliably pulling the right/relevant data from which to generate the answer.

This is where hallucinations come from.

This week our platform hit a major milestone: data retrieval error rate is now below 5%. That means NEXT AI’s chat can find and retrieve the relevant data it needs with industry-leading accuracy. Answers are noticeably more precise, less noisy, and more useful. Better retrieval → better evidence → better answers.

PDF support

Upload PDF files to NEXT and watch it neatly extract all the structured text and tables so they’re searchable and analyzable just like any other source in NEXT. This unlocks a big new pocket of evidence: feedback that used to be “locked” in PDFs can now power analysis—helping teams spot patterns earlier, and make more predictive decisions from previously hard-to-use inputs.

PDF support is available to Enterprise customers.

Full customer quotes now in all highlight dialogs

The highlight dialog now shows the complete quote, making long highlights (survey responses, call snippets, tickets, forum posts) much easier to read and understand. To keep the highlights library clean and fast, we no longer render full quotes there—resulting in easy scanning, faster loading, and smoother scrolling on large datasets.

Sticky chat context

When you select a data source in chat (like a specific survey), that selection is now preserved across follow-up questions.

You can start by choosing a source, ask an initial question, and keep refining—without the chat silently switching/resetting context. Any new sources you add later are added to the context rather than replacing it. The chat is more predictable and consistent—follow-ups stay grounded in exactly the data you chose.
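The add-rather-than-replace behavior can be sketched as an order-preserving merge of source lists. The source names are examples and `merge_sources` is a hypothetical helper, not part of any NEXT AI API.

```python
def merge_sources(current: list[str], added: list[str]) -> list[str]:
    """Return the chat's sources with new ones appended; existing ones are never dropped."""
    merged = list(current)
    for source in added:
        if source not in merged:  # skip duplicates, keep the original order
            merged.append(source)
    return merged


context = ["Q4 NPS survey"]
context = merge_sources(context, [])  # a follow-up question adds nothing
context = merge_sources(context, ["Support tickets", "Q4 NPS survey"])
print(context)  # ['Q4 NPS survey', 'Support tickets']
```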

Explicit Data Source Selection in Chat

Previously, chat could implicitly have access to all available sources (surveys, G Drive, Confluence, etc.). Now, the AI is limited to the inputs you explicitly opt into for that chat. This makes it much clearer where answers are coming from, improves trust, and better aligns with enterprise expectations around data access and privacy. Conversations become more predictable, more explainable, and easier to reason about—with no hidden data pulled in behind the scenes.

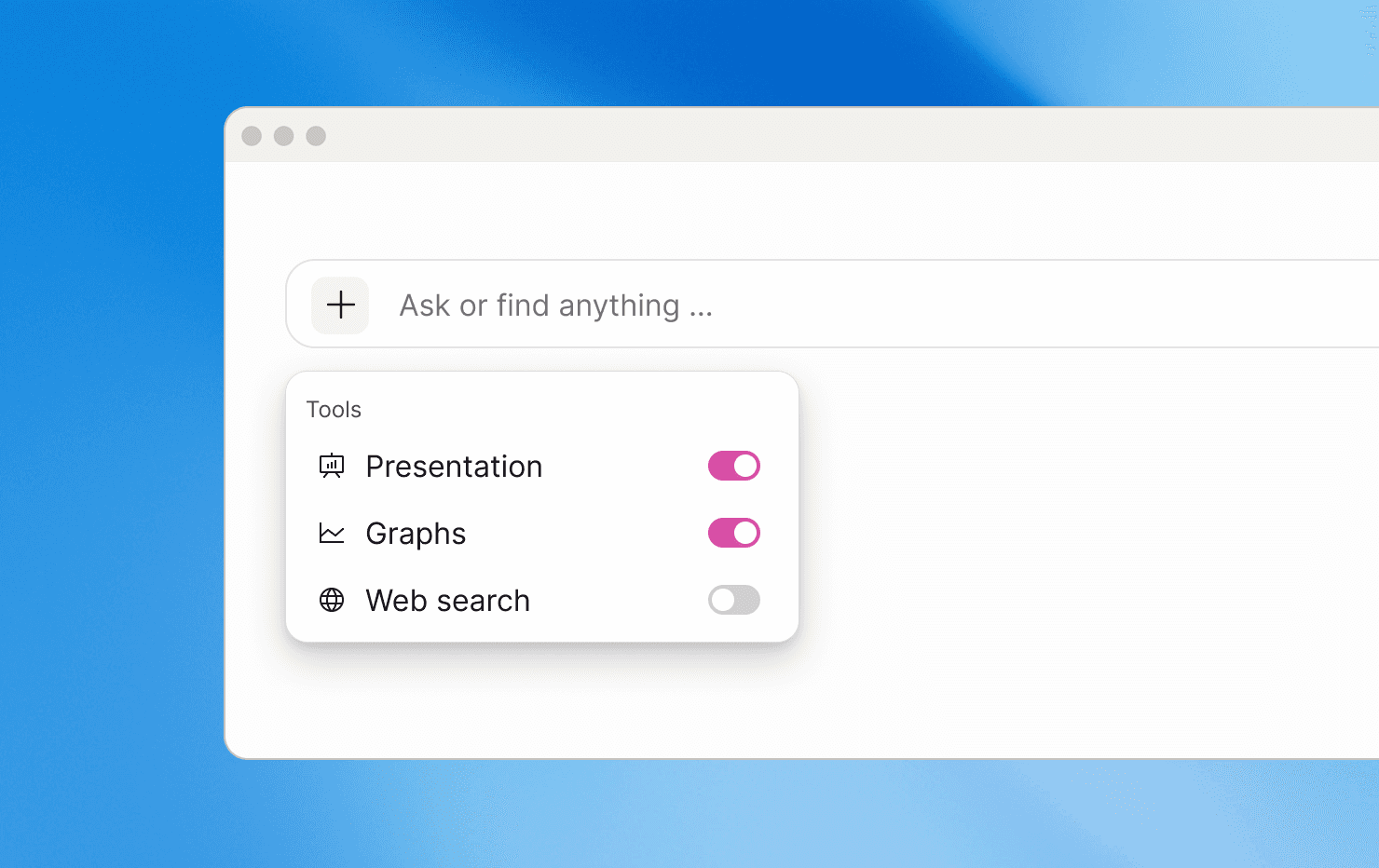

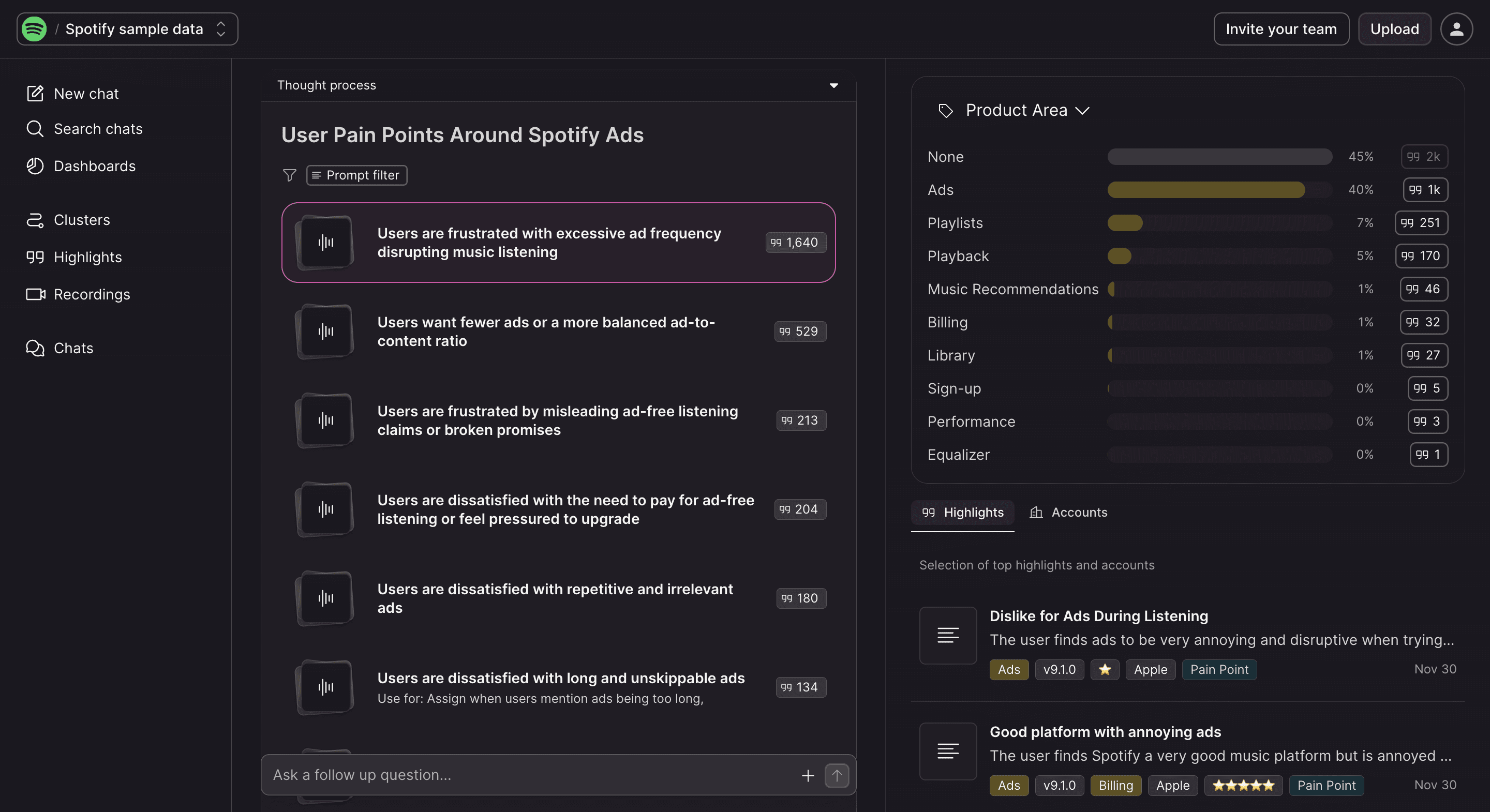

New tools in chat: Presentations & Graphs

Two new tools are now available in chat:

Presentation generates a ready-to-use slide deck from your question.

Graph plots your response on a chart, ideal for visual analysis and sharing.

When selected, the chat uses the tools to produce the output requested—no extra prompting needed. A faster path from feedback to shareable artifacts.

Simpler prompt bar

We simplified the prompt experience to keep onboarding and day-one usage easy and clear. These updates keep attention where it matters and make the starting experience more streamlined and intuitive.

Easier account search

Searching for accounts now works the way users expect: as you type, results match by prefix, so it’s much easier to quickly find the right account — even in teamspaces with thousands of accounts.
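Prefix matching stays fast on large lists if the names are kept sorted, because every match for a prefix then sits in one contiguous run. This minimal sketch uses the standard `bisect` module to illustrate the matching behavior only; the `AccountIndex` class is hypothetical and is not NEXT AI's search implementation.

```python
import bisect


class AccountIndex:
    """Case-insensitive prefix search over account names."""

    def __init__(self, names: list[str]):
        # Store (lowercased key, original name) pairs, sorted by key.
        self._entries = sorted((n.lower(), n) for n in names)
        self._keys = [key for key, _ in self._entries]

    def search(self, prefix: str, limit: int = 10) -> list[str]:
        p = prefix.lower()
        # Binary search for the first key that is >= the prefix.
        start = bisect.bisect_left(self._keys, p)
        results = []
        for i in range(start, len(self._entries)):
            key, name = self._entries[i]
            # Matches are contiguous, so stop at the first non-match.
            if not key.startswith(p) or len(results) == limit:
                break
            results.append(name)
        return results


index = AccountIndex(["Acme GmbH", "Acorn Labs", "Beta Corp", "acme Retail"])
print(index.search("ac"))  # ['Acme GmbH', 'acme Retail', 'Acorn Labs']
```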

Jan 5, 2026

Web Search in Chat, Improved Data Retrieval, Richer Imports from Intercom and Salesforce

Another week, another set of improvements - focused on precision, control, and reducing manual effort. Here’s what’s new 👇

Web Search in Chat

You can now explicitly force web search when asking a question in chat.

By default, nothing changes - the AI still decides when external information is needed. But when you turn web search on, the system will always consult external sources as part of its reasoning.

This is perfect for questions where you know up-to-date or external information is required and want full confidence that the web is included - no guesswork.

Less Manual Work for High-Quality Auto-Narrowing on Tags

Tag descriptions play a critical role in auto-narrowing - but maintaining them manually doesn’t scale.

From now on, tag descriptions are generated automatically by AI when tags are created. These descriptions capture meaning, context, and synonyms beyond the tag name itself, helping the system distinguish between similar or overlapping tags.

The result: more precise narrowing and better answers - without extra configuration.

You can still review and edit descriptions anytime in the UI.

Filter by Absolute Date Ranges, Made Easy

Filtering by date just became far more intuitive.

You can now use clear, ISO-style calendar ranges like: date:2025_12_01-2025_12_31

This makes common queries such as “all of 2024,” “Q1 2025,” or “Jan 1–Dec 5” simple and readable - no timestamp math required.

Relative filters (like “last 3 months”) continue to work as before. This update simply adds a more human-friendly option when precision matters.
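To make the range syntax concrete, here is a minimal sketch that parses a value such as `date:2025_12_01-2025_12_31` into a pair of dates, treating both ends as inclusive. It only covers the `YYYY_MM_DD-YYYY_MM_DD` form shown above; the function name and error handling are assumptions, and the product's actual parser may accept more forms.

```python
import re
from datetime import date, datetime

# date:YYYY_MM_DD-YYYY_MM_DD (both ends treated as inclusive here)
RANGE_PATTERN = re.compile(r"^date:(\d{4}_\d{2}_\d{2})-(\d{4}_\d{2}_\d{2})$")


def parse_date_range(token: str) -> tuple[date, date]:
    match = RANGE_PATTERN.match(token.strip())
    if match is None:
        raise ValueError(f"not an absolute date range: {token!r}")
    # strptime also rejects impossible dates such as 2025_02_30.
    start, end = (datetime.strptime(part, "%Y_%m_%d").date() for part in match.groups())
    if start > end:
        raise ValueError("range start is after range end")
    return start, end


print(parse_date_range("date:2025_12_01-2025_12_31"))
# (datetime.date(2025, 12, 1), datetime.date(2025, 12, 31))
```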

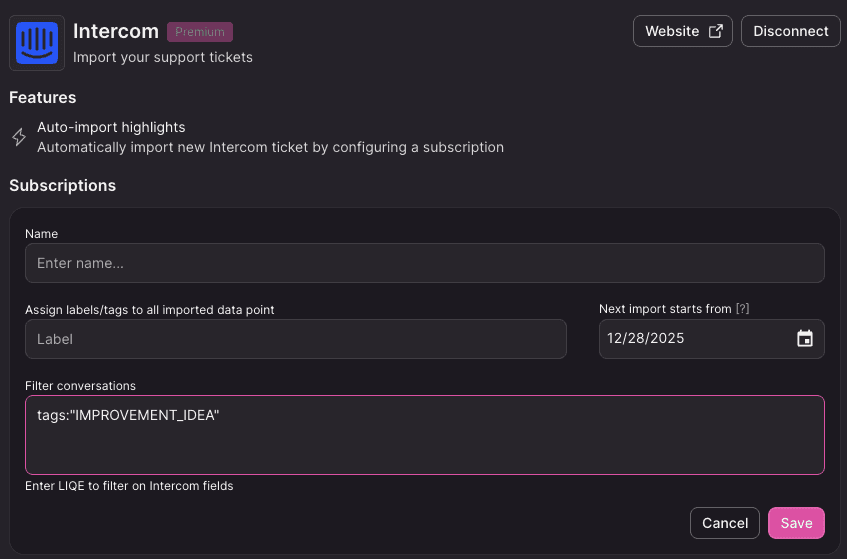

Advanced Filtering for Intercom Imports

Intercom imports now support pre-filtering conversations by tag.

Instead of importing everything, you can pull in only what matters - for example, conversations tagged with IMPROVEMENT_IDEA. The setup supports flexible LIQE filters like: tags:"IMPROVEMENT_IDEA"

The payoff: cleaner imports, less noise, and datasets that are already aligned with your goals.
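Conceptually, a tag filter keeps only the conversations that carry that tag before anything is imported. The sketch below implements just the single-field, exact-tag case in plain Python; it is not the LIQE query engine (which supports a much richer syntax), and the conversation shape is a simplified assumption rather than Intercom's API payload.

```python
import re

# Matches only the simple form tags:"SOME_TAG".
TAG_FILTER = re.compile(r'^tags:"([^"]+)"$')


def prefilter(conversations: list[dict], query: str) -> list[dict]:
    """Return the conversations whose tag list contains the tag named in the query."""
    match = TAG_FILTER.match(query.strip())
    if match is None:
        raise ValueError(f"unsupported filter: {query!r}")
    wanted = match.group(1)
    return [c for c in conversations if wanted in c.get("tags", [])]


conversations = [
    {"id": "c1", "tags": ["IMPROVEMENT_IDEA", "billing"]},
    {"id": "c2", "tags": ["bug"]},
    {"id": "c3", "tags": []},
]
print([c["id"] for c in prefilter(conversations, 'tags:"IMPROVEMENT_IDEA"')])  # ['c1']
```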

Richer Survey Context in Salesforce Marketing Cloud Imports

Salesforce Marketing Cloud imports now include human-readable survey question names.

By passing “pretty” question fields (e.g. q1_question_translation), NEXT automatically pairs them with their corresponding answers during import.

This gives both humans and AI much better context - leading to more accurate understanding of feedback and easier interpretation of survey data, without changing any existing endpoints or credentials.
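The pairing step can be pictured as matching each `qN_question_translation` field with the answer field that shares its `qN` prefix. In this sketch the answer fields are assumed to be named `q1`, `q2`, and so on; that naming, the sample row, and `pair_questions` are hypothetical, since the actual SFMC field names depend on your survey setup.

```python
import re

# Question-label fields look like q1_question_translation, q2_question_translation, ...
QUESTION_FIELD = re.compile(r"^(q\d+)_question_translation$")


def pair_questions(row: dict) -> list[tuple[str, str]]:
    """Return (question text, answer) pairs for every question that has an answer."""
    pairs = []
    for field, question in row.items():
        match = QUESTION_FIELD.match(field)
        if match is None:
            continue
        answer = row.get(match.group(1))  # assumed answer field: q1, q2, ...
        if answer not in (None, ""):
            pairs.append((question, answer))
    return pairs


row = {
    "q1_question_translation": "How easy was checkout?",
    "q1": "Very easy",
    "q2_question_translation": "What should we improve?",
    "q2": "Faster delivery",
    "country": "DE",
}
print(pair_questions(row))
```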

More precision. Less manual work. Better answers.

Stay tuned - there’s more coming 🚀

Dec 26, 2025

Ask follow-up questions in chat

This week we shipped two improvements that make exploration more powerful and analysis more trustworthy - without adding friction.

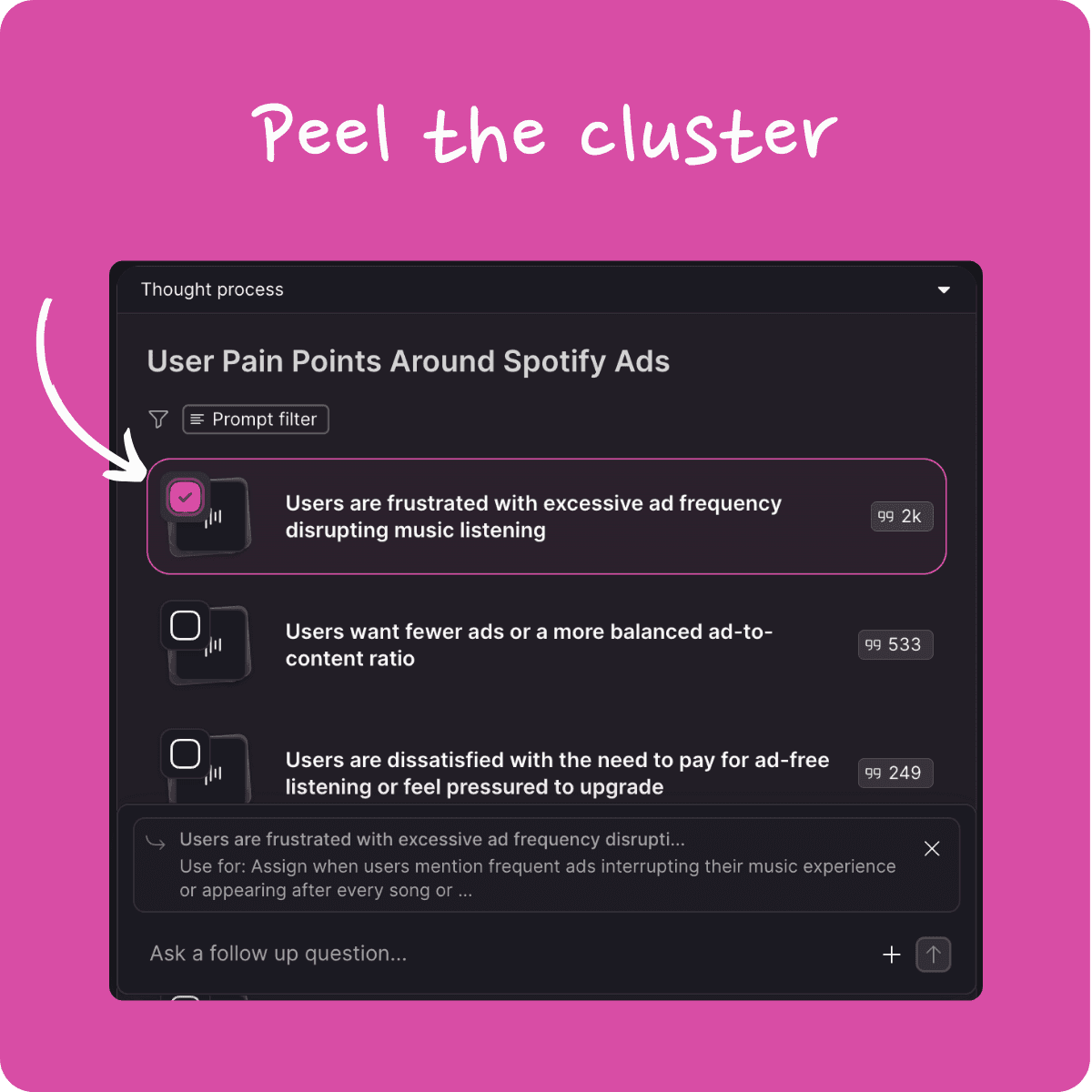

Follow-up questions on clusters in chat

Clusters in chat are no longer a dead end. You can now ask follow-up questions on one or more clusters and even generate sub-clusters to dig deeper, all while staying in the same context. This makes it easier to move from a high-level overview to detailed insights in a single flow.

Dec 19, 2025

Research mode, Test hypothesis mode, Better support for GitHub in automations

Another week, another set of improvements designed to make your work faster, clearer, and more predictable. Here’s what shipped recently 👇

New Chat Modes for More Directed Answers 🎉

You can now guide how the AI thinks by selecting a chat mode before sending your message.

Choose between Research and cluster (to group, quantify, and explore themes) or Test hypothesis (to validate or refute assumptions using data).

Each mode is clearly explained on hover and visibly marked when active, so you always know what’s shaping the response. The result: more intentional answers, without adding complexity to the chat experience.

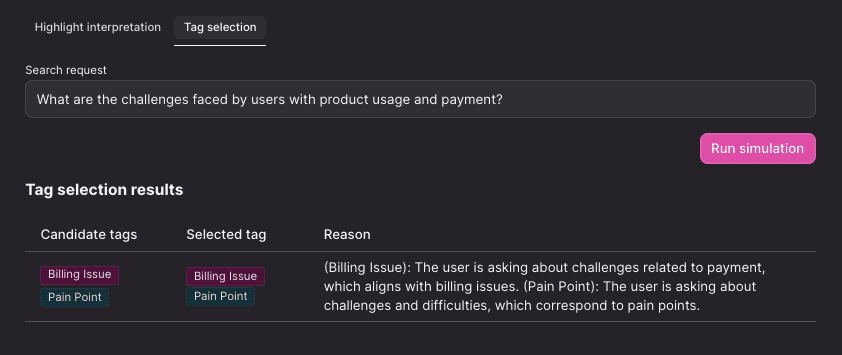

Chat Now Supports Accurate Multi-Tag Focus 🎉

The chat now automatically narrows its focus using all relevant tags - not just one. This leads to more precise insights, especially when multiple dimensions matter.

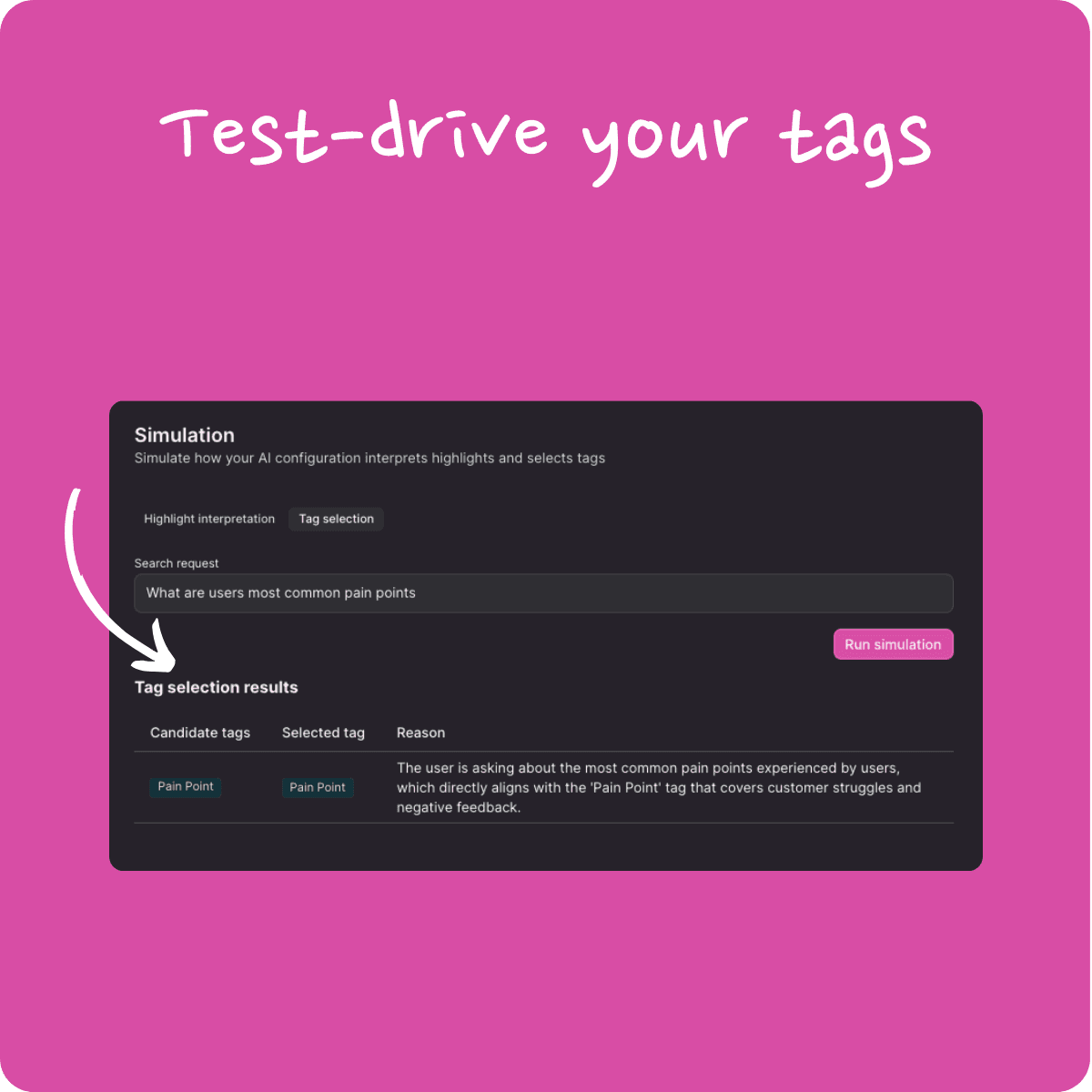

To make this transparent, you can inspect tag choices via Tag Selection Simulation and review the reasoning in a dedicated evaluation report. Better focus in, better answers out.

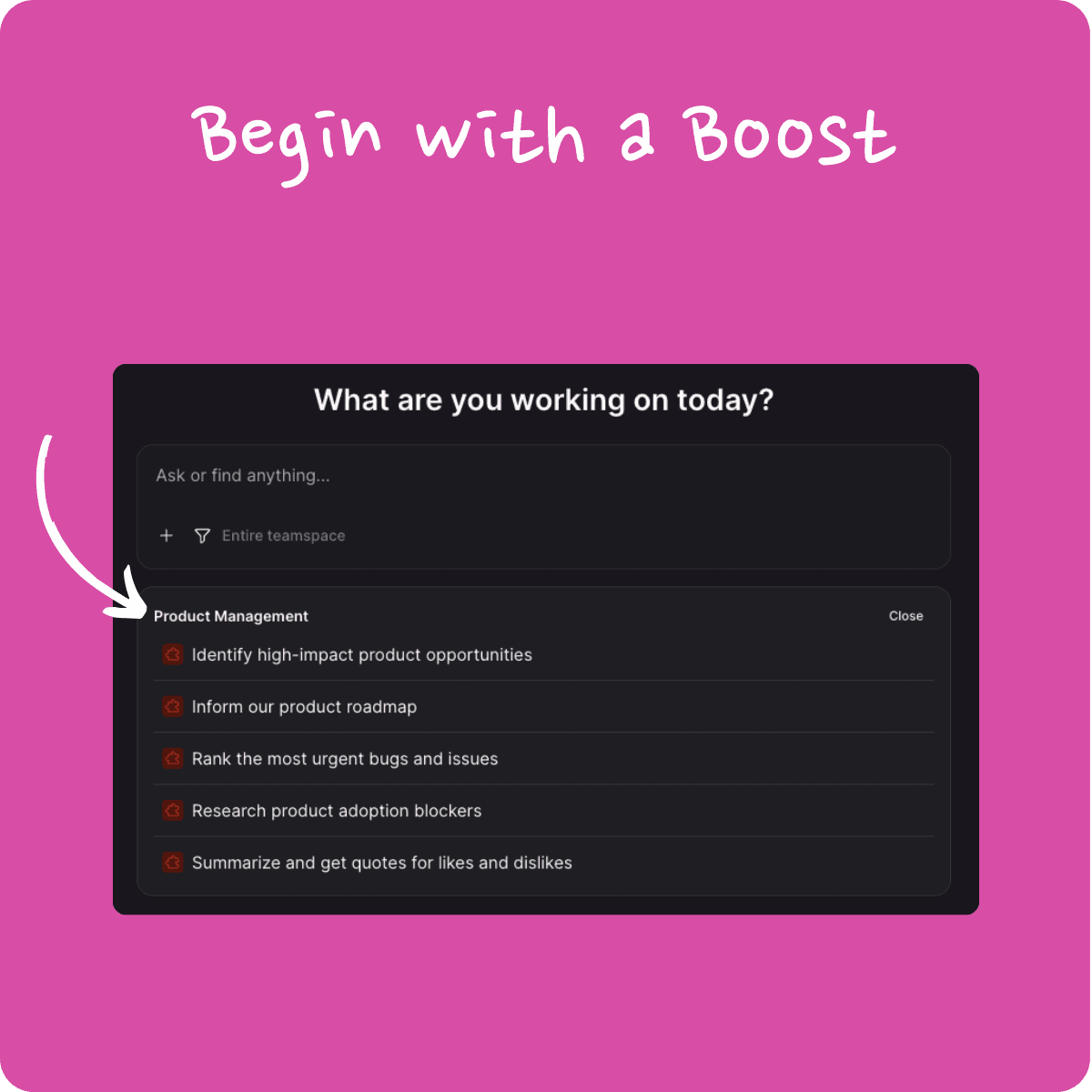

Prompt Templates on Home Now Apply Focus 🎉

Homepage prompt templates now apply both the prompt and its predefined focus in one click.

That means templates can reliably answer scoped questions - by region, time period, product, tag, or segment - without relying on auto-narrowing. The focus is visible in the prompt box, ready for review before sending, so there are no surprises.

Homepage Prompt Templates Now Available Everywhere 🎉

The default homepage prompt templates are now available across all existing teamspaces.

Previously limited to newly created spaces, these starting prompts are now consistently available to everyone - making it easier to jump into meaningful analysis from day one.

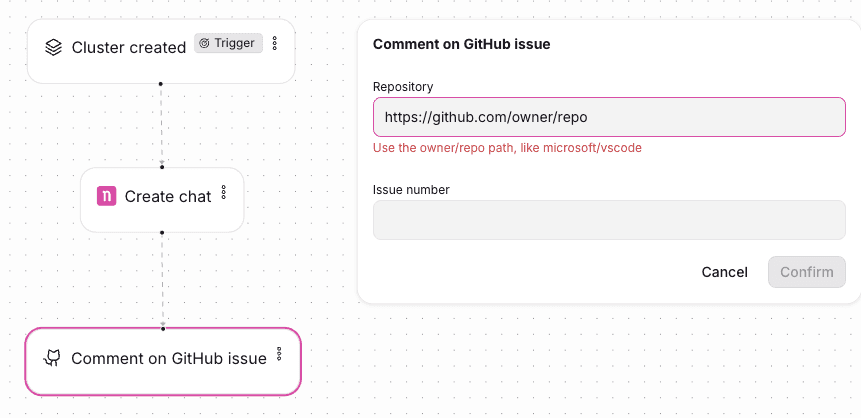

Validation for GitHub Repositories in Automations UI 🎉

Configuring GitHub-based automations just got smoother. Repository values are now validated as you type, and automations can only be saved once everything checks out.

This prevents misconfigurations early and avoids broken setups later — so automations work the way you expect, from the start.
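As-you-type validation of this kind can start with a format check on the `owner/repo` value. The pattern below is an assumption based on common GitHub naming rules (owner names of letters, digits, and single hyphens, up to 39 characters; repository names of letters, digits, `.`, `_`, and `-`); it is not the validator NEXT AI uses, and a complete check would also confirm that the repository exists and is accessible.

```python
import re

# Owner (user or org): 1-39 characters, letters/digits or single hyphens,
# not starting or ending with a hyphen.
OWNER = r"[A-Za-z0-9](?:[A-Za-z0-9]|-(?=[A-Za-z0-9])){0,38}"
# Repository name: letters, digits, '.', '_' and '-', up to 100 characters.
REPO = r"[A-Za-z0-9._-]{1,100}"
REPO_PATTERN = re.compile(OWNER + "/" + REPO)


def is_valid_repo(value: str) -> bool:
    """Format check only; it does not verify that the repository exists."""
    value = value.strip()
    if REPO_PATTERN.fullmatch(value) is None:
        return False
    # '.' and '..' fit the character class but are not usable repository names.
    return value.split("/", 1)[1] not in {".", ".."}


for candidate in ["octocat/hello-world", "octocat", "bad--owner/repo"]:
    print(candidate, is_valid_repo(candidate))
```

Running a cheap format check on every keystroke and saving only when it passes catches typos before an automation is ever created.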

Dec 12, 2025

Better data interpretation in chat, Faster clustering, Better feedback in 'thinking', Integrations monitoring

Another week, another set of meaningful improvements. Here’s a quick, high-signal roundup of what’s new - focused on clarity, speed, and trust in every step of the workflow.

Tag Selection Simulation 🎉

We’ve added a Tag Simulation Mode: a safe sandbox where you can test tag descriptions, prompts, and context without touching production data. Experiment freely, see how the system would behave, and only apply changes once you’re confident. Fewer surprises, better decisions.

Highlights Are Now Facts, Not Insights 🎉

Highlights have been reframed from interpretations to literal facts. This removes assumptions and keeps outputs strictly grounded in the source material.

More neutral and consistent highlights

Cleaner inputs for clustering, tagging, and UX analysis

Faster & Smarter Clustering 🎉

Our clustering algorithm got a serious upgrade.

20–50% faster runtimes

Higher match quality with fewer false positives

Clearer “Thinking” Feedback in Chat 🎉

We replaced the old pulsating dot with a subtle animation on the status text (e.g. “Generating clusters…”). As long as it’s animating, you know the agent is actively working - making long-running steps feel transparent instead of stuck.

Much Faster Search Suggestions 🎉

Search suggestions are now up to 10× faster, especially when refining queries. Less waiting, more flow.

Email Alerts When Imports Stop 🎉

If an integration subscription becomes disabled (for example due to expired or revoked access), we now send an email immediately explaining what happened and what to do next. No more silent failures or detective work weeks later - just instant visibility and a direct link back to settings.

Goodbye Prompt-Generated Dashboards 🎉

We’ve removed the “create dashboard from a prompt” option. These dashboards often came with confusing, opaque filters. From now on, every new dashboard starts as a clean slate - no hidden logic, no mystery setup. You’re fully in control.

Dec 5, 2025

Prompt templates, Better cluster descriptions, Pretty analytics in chat, Better file upload docs in API

A fresh week, a fresh batch of improvements - all designed to make analysis smoother, onboarding clearer, and insights more trustworthy. Let’s dive in 👇

Home page helps users craft their first prompts 🎉

Starting a new chat is now instantly intuitive.

The homepage presents organized prompt categories - from Marketing & CX to User Research - each revealing ready-to-use templates in alphabetical order.

Users can even add their own categories, making the first step into AI-powered analysis faster and less overwhelming.

More Reliable Auto-Narrowing in Chat 🎉

Focused questions now return truly focused answers.

We improved how chats select and narrow data based on tags, so each chat looks at exactly the slice of feedback you intended - not adjacent topics.

What’s improved:

More accurate tag matching for targeted questions

Reduced over- or under-narrowing

Ongoing quality checks to maintain precision over time

When you say “only look at onboarding feedback,” the system really does.

Clearer Cluster Descriptions 🎉

Cluster descriptions now read the way humans speak, not the way machines think.

They remain technically accurate but are now far easier to scan, understand, and present - especially when moving from exploration to summary.

More Objective, Fact-Based Highlights 🎉

Highlights now state exactly what was said - no emotional tone, no interpretation.

This shift means more reliable insight across Labs, search, and chat, and a cleaner foundation for every downstream analysis.

Refreshed Bar Charts in Labs 🎉

Our updated charts now look calmer, cleaner, and more consistent with the interface:

Softer, neutral colors

All values visible by default

A smoother visual balance for quick interpretation

Charts that illuminate insights, not overshadow them.

Prettier graphs 🎉

Graphs introduced in chat last week have been refined visually and now blend seamlessly into the overall NEXT design - polished, readable, and delightful to use.

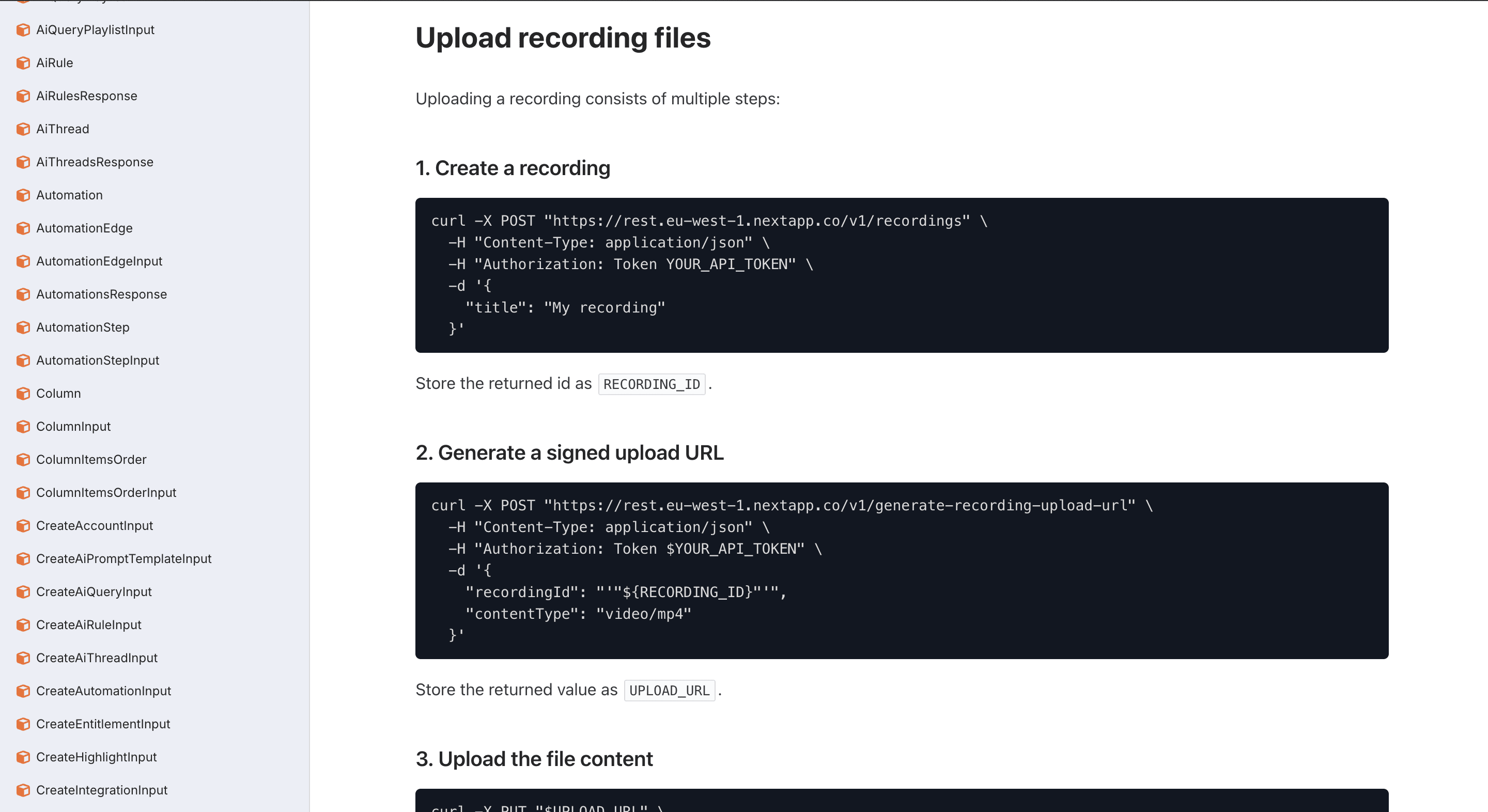

New File Upload Documentation 🎉

We’ve released streamlined, end-to-end documentation for recording uploads via REST API.

Endpoints are clearer, steps are consistent, and examples are ready to copy/paste — reducing friction for anyone programmatically sending files into NEXT.

📄 Check it out: https://developer.nextapp.co/

More improvements are already underway - stay tuned for next week’s update!

Nov 28, 2025

Weekly updates (29 Nov '25)

Another week, another batch of improvements to help teams explore data faster, see patterns clearer, and work more confidently with sensitive information.

Labs: From Highlights to Accounts 🎉

Labs now lets you explore clusters from two complementary perspectives: individual highlights and aggregated accounts.

Dive into quotes for nuance, or switch to account view to understand which companies, regions, and segments are shaping the pattern. Qualitative depth and strategic context - unified in one flow.

Charts in Chat 🎉

Chat just got more visual. We’ve added native bar charts so comparisons and distributions are instantly understandable.

Instead of relying only on text or tables, insights now come to life directly inside the conversation.

Clearer Breakdown Dimensions in Dashboards 🎉

We’ve replaced compact pie charts with expandable bar charts.

The result: clearer comparisons, better readability, and easier exploration of every breakdown dimension — especially when dealing with detailed data sets.

Stronger Data Privacy for App Store Imports 🎉

Usernames are no longer imported from App Store and Play Store reviews.

This minimizes unnecessary personal data, supports GDPR-aligned practices, and avoids accidental AI bias or confusion caused by usernames — cleaner data in, better insights out.

Nov 21, 2025

Weekly updates (21 Nov '25)

Another week, another set of meaningful improvements to make your work with qualitative data faster, clearer, and more scalable. Here’s what’s new.

Explore Clusters in Chat with Labs 🎉

We’ve launched Labs - a focused experience for working with clusters inside chat.

Instead of long explanations, Labs puts clusters front and center. Answers are now cluster-only, with clear signal-style strength indicators that set expectations around precision. When you want to go deeper, simply click a cluster to open the Labs side panel for detailed exploration - without cluttering your chat stream.

The result: a cleaner, more trustworthy, and more scalable way to explore insights.

Hypothesis Validation Mode is Now Live 🎉

Chat has always been great for open-ended exploration. Now, it’s also built for structured validation.

With Hypothesis Validation Mode, you can test a specific assumption and get two clear evidence streams:

Supporting evidence - statements that back up your hypothesis

Contradicting evidence - statements that challenge or refine it

This gives teams a direct, evidence-based path to validate (or invalidate) assumptions using real qualitative data.

Copy-Paste Transcripts from NEXT 🎉

You can now copy full transcripts - including speaker names and timestamps - directly from the recording page.

Just select “Copy transcript” under Transcript options and paste clean, structured text into Google Docs or Word. Perfect for UXR workflows, internal reporting, and stakeholder sharing.

No more guesswork around who said what and when.

Smarter, Cleaner AI Cluster Creation 🎉

We’ve refined our AI cluster generation to improve both relevance and granularity.

By strengthening our prompts, we now:

Enforce stricter alignment with the user’s question

Prevent off-scope themes from creeping in

Only merge clusters that are true duplicates

The result: more accurate, better-scoped, dimension-focused clusters that hold up under scrutiny.

Better Account-Based Search 🎉

You can now filter recordings by account and account metadata (industry, segment, etc.) directly from the recordings library.

We’ve also simplified account filtering across highlights. Instead of using recording.account.xxx, you can now use account.xxx everywhere - making search more consistent, especially for highlights coming from direct imports.

Finding the right data is now faster and more intuitive.

Hybrid Search for Chats: Keywords + Semantic 🎉

Chat search just got significantly more powerful.

We now combine semantic search with keyword matching in a single experience. This means you can:

Ask natural-language questions for context-driven results

Search for exact terms, names, or IDs with precision

Mix both, without switching modes

Flexible when you need context. Exact when you need control.
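The changelog doesn’t say how keyword and semantic results are merged into one list. One common technique is reciprocal rank fusion (RRF). The Python sketch below illustrates it and is not NEXT’s implementation. The document IDs are made up, and k = 60 is simply a value often used with RRF.

```python
from collections import defaultdict
from typing import List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into a single ranking.

    Each list contributes 1 / (k + rank) to a document's score (rank starts at 1).
    Higher combined scores rank first, and ties are broken by document ID.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


keyword_hits = ["c3", "c1"]         # exact-term matches (illustrative)
semantic_hits = ["c1", "c2", "c3"]  # natural-language matches (illustrative)
print(reciprocal_rank_fusion([keyword_hits, semantic_hits]))  # ['c1', 'c3', 'c2']
```

A document that ranks well in both lists (c1) beats one that tops only a single list (c3). That is the behavior you want when a query mixes exact terms with natural-language intent.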

Refined Wootric Mapping Is Live 🎉

We’ve rolled out a fully validated and more accurate Wootric field mapping.

This delivers cleaner, richer survey imports, including:

Clear NPS/CES question prefixes in feedback text

Normalized 5-star ratings

Automatic score labels like “NPS: 9” or “CES: 4”

Expanded tagging for edition, platform, user profile, industry, reseller, UI type, and more

The result: better-structured highlights, more context, and less manual cleanup.

More to come next week - as always, we’re building fast and listening closely.

Nov 14, 2025

Weekly updates (14 Nov '25)

Import Survey Responses from Salesforce Marketing Cloud 🎉

NEXT now connects directly with Salesforce Marketing Cloud (SFMC) to import CSAT and other survey responses - ideal for teams using SFMC as their customer feedback backbone.

This integration is completely independent from the existing Salesforce CRM connector, reflecting Marketing Cloud’s separate APIs and authentication.

Once you provide your SFMC credentials, NEXT automatically pulls in feedback from your Data Extensions. This lets you unify insights from CRM and Marketing Cloud into a more complete picture of your customers.

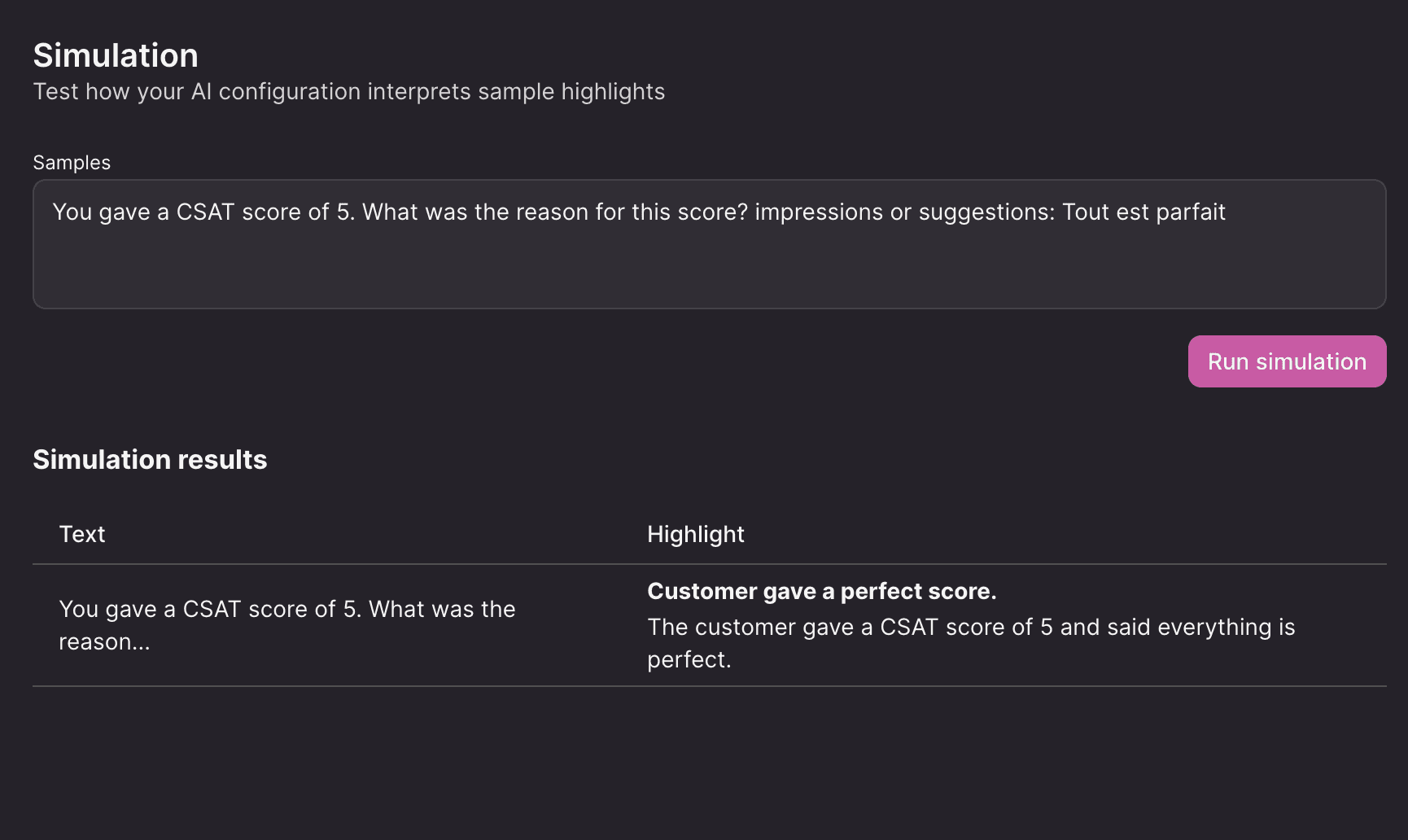

Introducing “Simulation” for AI Instructions 🎉

Configuring AI instructions just got dramatically easier.

With the new Simulation feature, you can paste a few sample texts and instantly preview how the AI would interpret them - titles, descriptions, everything.

No more test data imports or cleanup runs. Just quick, safe iteration until your setup feels perfect.

See Highlight Counts Before Starting a Job 🎉

Ever kicked off a tagging or clustering job only to realize the focus was empty? That pain is now gone.

When you set a focus, NEXT shows the exact number of highlights that match - directly in the confirmation dialog.

A small addition with a big impact: fewer mistakes, faster workflows.

Wootric Import Now Supports Multiple Survey Types 🎉

Running NPS, CES, and CSAT surveys at the same time? NEXT now handles them all in parallel.

Every response is tagged with its original score - for example “NPS: 8” or “CES: 4” - making it easy to filter and explore.

And thanks to unified 5-star conversion logic for each metric type, dashboards can now display consistent rating visualizations across all your Wootric data.

Nov 7, 2025

Weekly updates (7 Nov '25)

It’s been an exciting week at NEXT - we’ve been busy polishing core workflows, rolling out smart automation, and making your daily experience even smoother. Here’s a quick tour of what’s new:

Smarter, Faster Accounts 🎉

Say goodbye to long load times when picking Accounts! We’ve redesigned the selector to search as you type, making it lightning-fast and scalable - whether you’re working with dozens or thousands of Accounts. It's now live in Recording details, the Recording sidebar, and the Accounts settings page.

Chats, Front and Center 🎉

Chats now take the spotlight in the left-hand navigation, with your 25 most recent threads just one click away. We’ve also added a “New chat” button right in the navigation, making it easier than ever to dive back in or start fresh.

See the Full Picture with “Others” and “None” in Chat Clusters 🎉

Cluster breakdowns in chat now include “Others” and “None” categories, just like in dashboards. That means no more guesswork when you’re only seeing the top few items - missing or low-frequency data is grouped cleanly for a clearer, more honest view.
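Here is a minimal Python sketch of a top-N breakdown with “Others” and “None” buckets. It makes two illustrative assumptions that are not documented NEXT behavior: missing values arrive as None or empty strings, and the cutoff is a fixed top_n.

```python
from collections import Counter
from typing import Dict, List, Optional


def breakdown(values: List[Optional[str]], top_n: int = 3) -> Dict[str, int]:
    """Count category values, keep the top_n most frequent, and bucket the rest.

    Missing values (None or "") are counted under "None". Every other
    category outside the top_n is summed into "Others".
    """
    missing = sum(1 for v in values if not v)
    counts = Counter(v for v in values if v)
    result = dict(counts.most_common(top_n))
    others = sum(counts.values()) - sum(result.values())
    if others:
        result["Others"] = others
    if missing:
        result["None"] = missing
    return result


print(breakdown(["DE", "DE", "DE", "FR", "FR", "US", "IT", None, ""], top_n=2))
# {'DE': 3, 'FR': 2, 'Others': 2, 'None': 2}
```

Because every value lands in exactly one bucket, the counts always add up to the total number of highlights.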

Sharpened Chat Titles Are Live 🎉

No more messy or repeated chat names! We now generate clear, concise titles for each thread using an AI model. Titles are short, descriptive, and automatically added once the chat begins - making your list easier to scan and navigate.

Smarter Tag Colors, Fewer Clicks 🎉

Creating new tags just got smarter: they now inherit the color of the first tag in their group. Plus, you can set a color at the group level and apply it to all tags within with a single click. Say hello to cleaner, more consistent visuals.

Richer Usabilla Survey Imports with Custom Context Templates 🎉

Enrich imported survey comments by adding context like NPS and selected answers using a simple templating language (e.g., {{data.nps}}). This keeps highlights compact and meaningful for both humans and models.

Full docs and examples here: https://help.nextapp.co/en/articles/12539034-import-getfeedback-usabilla-feedback
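Only the {{data.nps}} placeholder comes from this changelog. The linked article is the reference for the actual syntax and available fields. To show roughly how mustache-style substitution works, the Python sketch below resolves dotted paths against a nested dict. The field names, and the choice to render unknown paths as empty strings, are assumptions.

```python
import re
from typing import Any, Dict

# Matches {{ dotted.path }}, allowing optional whitespace inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def render(template: str, context: Dict[str, Any]) -> str:
    """Replace each {{dotted.path}} with the matching value from context.

    Unknown paths render as an empty string (an assumption for this sketch).
    """
    def lookup(match: re.Match) -> str:
        value: Any = context
        for key in match.group(1).split("."):
            if not isinstance(value, dict) or key not in value:
                return ""
            value = value[key]
        return str(value)

    return PLACEHOLDER.sub(lookup, template)


survey = {"data": {"nps": 9}, "comment": "Great app"}  # hypothetical response
print(render("NPS: {{data.nps}} | {{comment}}", survey))  # NPS: 9 | Great app
```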

Wootric Integration Is Live 🎉

You can now import NPS and CES surveys from Wootric (InMoment) directly into NEXT. Just plug in your API credentials in the integration settings, and we’ll map your survey responses into highlights, tags, and Accounts - no more manual work.

“Assistant” Is Now “Procedure” 🎉

We’ve renamed the former “Assistant” feature to “Procedure” to better reflect its purpose: guiding users through repeatable workflows. The new name makes it clearer where chat ends and structured workflows begin - setting the stage for even more powerful automation.

More improvements are already in the works. Stay tuned, and as always, thanks for being part of the journey! 💡

Oct 31, 2025

Weekly updates (31 Oct '25)

Another week, another batch of improvements making NEXT faster, clearer, and more delightful to use. From a chat-first experience to smarter automations — here’s what’s new this week 👇

A new, chat-first homepage 🎉

NEXT’s homepage now puts conversation front and center. What was once a hybrid of dashboards and chat has been streamlined into a focused, AI-first experience that clearly communicates what NEXT stands for: an intelligent assistant that helps you discover insights through dialogue.

Dashboards are still just a click away - but Chat now takes the spotlight. Chat. Quantify. Conquer.

“Threads” are now “Chats” 🎉

We’ve renamed Threads to Chats across the platform. The change brings clarity and consistency, aligning NEXT’s language with the way users naturally talk about conversational AI. No more second-guessing - just Chat.

Chat search has a new home 🎉

Searching past chats is now simpler and more intuitive. Instead of appearing in a right-hand fly-out, Chat Search opens in the main content area, showing results where you expect them. Fly-outs remain for highlights and clusters - Chat Search now has a home of its own.

Board reorder drop indicator 🎉

Reordering clusters on boards just got smoother. You’ll now see a clear line showing exactly where your card will land when you drag and drop — making it easier to organize insights with confidence.

New Zapier Action: “Find Account” 🎉

Automation just got smarter. You can now search for existing Accounts directly from Zapier and link highlights or recordings to the right one automatically. Use the account name as the search term, set limit = 1, and take the id from the first result — it’s that easy.
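In Zapier itself you configure this in the editor, not in code. The Python sketch below only mirrors the described steps so the logic is easy to follow: search by account name, set limit = 1, and take the id of the first result. search_accounts and the sample accounts are hypothetical stand-ins for the Find Account action.

```python
from typing import Callable, List, Optional

# Hypothetical signature for the "Find Account" search: (term, limit) -> results.
SearchFn = Callable[[str, int], List[dict]]


def find_account_id(search: SearchFn, account_name: str) -> Optional[str]:
    """Return the id of the first matching account, or None if nothing matched."""
    results = search(account_name, 1)  # search term = account name, limit = 1
    return results[0]["id"] if results else None


# Hypothetical sample data and search behavior, for illustration only.
ACCOUNTS = [{"id": "acc_1", "name": "Acme GmbH"}, {"id": "acc_2", "name": "Beta Ltd"}]


def search_accounts(term: str, limit: int) -> List[dict]:
    matches = [a for a in ACCOUNTS if term.lower() in a["name"].lower()]
    return matches[:limit]


print(find_account_id(search_accounts, "Acme"))  # acc_1
```

Returning None when nothing matches gives a later step something explicit to branch on. Without it, a highlight could end up linked to the wrong account.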

Set tags for new highlights in Zapier 🎉

You can now assign tags to highlights as they’re created via Zapier. This makes it effortless to categorize incoming data, keep workflows tidy, and ensure every new highlight lands exactly where it belongs.

✨ That’s it for this week - small changes, big impact.

Oct 24, 2025

Weekly updates (24 Oct '25)

Another week, another round of polish and improvements to make your experience smoother and more consistent across the platform. Here’s what’s new:

Date Picker Design Updated 🎉

Our date picker got a visual refresh! It now aligns perfectly with other text fields for a cleaner, more unified interface. We’ve also switched to the filled variant in integration dialogs - creating a consistent, modern look across all input elements.

View Reorder Drop Indicator 🎉

Reordering your views just got easier. A new drop indicator now shows exactly where a view will land as you drag it - making organizing your workspace more intuitive than ever.

Oct 17, 2025

Weekly updates (17 Oct '25)

Our product team has been on fire this week — adding new analytics powers, smarter imports, and improved clarity across the platform. Here’s what’s new:

Quantify Open-Ended Survey Responses 🎉

You can now turn free-text survey answers into structured insights - directly within NEXT.

Select your survey, run the quantification, and discover what your customers really mean behind their words.

👉 Learn more in our Help Center

Chat Clusters Now Break Down by Tag Groups 🎉

Cluster analytics in chat just got more powerful. In addition to Account metadata, you can now segment clusters by tag groups such as Country, Device, User Journey phase, or Sentiment.

This makes it easier to spot nuanced trends and compare how themes differ across segments.

NEXT Now Imports Speakers, Accounts, and Tags from Modjo 🎉

When importing calls from Modjo, NEXT now brings along key context:

Speakers are included in the transcript

Accounts and tags are linked automatically

This makes imported calls richer and more searchable right out of the box.

Advanced Filtering for Modjo Imports 🎉

Fine-tune exactly which calls to bring in from Modjo using a new filter field.

You can filter by almost any Modjo or linked Salesforce field - such as account name or deal title - for ultimate control.

👉 See available filters here

CSV Import Now Flags Duplicate Highlights 🎉

No more guessing why some highlights didn’t make it in. If your CSV upload contains duplicates, NEXT now tells you which ones were skipped — so your import counts always add up.
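The changelog doesn’t define what counts as a duplicate. The Python sketch below makes two assumptions:

- Two rows are duplicates when their highlight text matches after trimming whitespace and ignoring case.
- The text lives in a column named text (a hypothetical name).

The point it illustrates is that skipped rows are returned alongside imported ones, so imported plus skipped always equals the number of data rows.

```python
import csv
import io
from typing import Dict, List, Tuple

Row = Dict[str, str]


def import_highlights(
    csv_text: str, key: str = "text"
) -> Tuple[List[Row], List[Tuple[int, Row]]]:
    """Split CSV rows into (imported, skipped_duplicates).

    Each skipped entry carries its 1-based data row number so it can be reported.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    seen = set()
    imported: List[Row] = []
    skipped: List[Tuple[int, Row]] = []
    for row_number, row in enumerate(reader, start=1):
        fingerprint = row[key].strip().lower()  # assumed duplicate rule
        if fingerprint in seen:
            skipped.append((row_number, row))
        else:
            seen.add(fingerprint)
            imported.append(row)
    return imported, skipped


data = "text,account_name\nSlow login,Acme\nGreat UI,Beta\nslow login ,Gamma\n"
imported, skipped = import_highlights(data)
print(len(imported), [n for n, _ in skipped])  # 2 [3]
```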

Oct 10, 2025

Weekly updates (10 Oct '25)

Another week, another set of powerful improvements to make your experience smoother, faster, and more insightful. Here’s what’s new at NEXT 👇

Chat with raw Usabilla survey data 🎉

Until now, users could only explore interpreted highlights extracted from Usabilla surveys. You can now go straight to the source — simply select the Usabilla survey in the prompt box and chat directly with the raw feedback data. Perfect for diving deeper into nuances that highlights alone can’t capture.

Smarter, scalable Slack channel selector 🎉

Large Slack workspaces? No problem. The channel picker now dynamically loads channels based on your search input — making it lightning-fast even for organizations with thousands of channels and users.

Richer metadata in Usabilla imports 🎉

We’ve supercharged our Usabilla integration to bring in more context and structure automatically.

New additions include:

Mood ratings imported as both numeric scores and visual tags (⭐️⭐️⭐️⭐️⭐️)

Platform detection from user agents (e.g., Mobile, Desktop)

This means cleaner segmentation and more actionable insights straight from your feedback data.
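Here is a minimal Python sketch of the two enrichments above, under stated assumptions:

- Mood is a 1-5 score, as the five-star example suggests.
- The platform check is a simple substring heuristic on the user agent that treats phones and tablets as Mobile.

NEXT’s actual detection rules aren’t documented here.

```python
def mood_tag(score: int) -> str:
    """Render a 1-5 mood score as a star tag, e.g. 3 -> three stars."""
    if not 1 <= score <= 5:
        raise ValueError(f"mood score out of range: {score}")
    return "⭐️" * score


# Substrings common in phone/tablet user agents (heuristic, not exhaustive).
MOBILE_TOKENS = ("mobi", "android", "iphone", "ipad")


def platform_from_user_agent(user_agent: str) -> str:
    ua = user_agent.lower()
    return "Mobile" if any(token in ua for token in MOBILE_TOKENS) else "Desktop"


print(platform_from_user_agent(
    "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X) Mobile/15E148 Safari/604.1"
))  # Mobile
```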

Prettier CSV import notification emails 🎉

Our CSV import notification emails just got a makeover. They now match the clean, friendly design of the rest of our communication — no more raw technical dumps, just clear and polished updates when your imports finish processing.

Oct 3, 2025

Weekly updates (3 Oct '25)

We’re back with another round of exciting improvements that make NEXT smarter, faster, and more insightful than ever. Here’s what’s new this week 👇

AI Chat now offers precise quantification and account breakdowns 🎉

NEXT’s AI Chat just became a true powerhouse for insight exploration - it now provides the same high-quality quantification as Clustering Jobs, plus detailed account breakdowns for each cluster.

Until now, the Chat offered quick estimates of how many highlights supported each theme. That’s changing. You can now trigger precise quantification directly within the Chat. Simply expand the clusters widget and hit [Run quantification].

Behind the scenes, NEXT runs the same models and logic as Clustering Jobs, ensuring accurate and consistent numbers right where you’re exploring insights. No need to create a separate Clustering Job for ad-hoc analysis - your Chat can now handle it all.

And once your clusters are quantified, you can dig deeper into what drives them. Click on any cluster to see a breakdown by account dimensions, instantly revealing which accounts or segments contribute most to each theme.

Smarter Thread focus by tag 🎉

Threads just got sharper. The AI that determines the most relevant tag now combines several algorithms, both to select candidate tags and to make the final pick.

The result? Noticeably smarter and more accurate tagging - especially in nuanced cases.

Assign tags to all highlights in a Job’s focus 🎉

You can now assign tags to all highlights within a Job’s focus. This makes it easy to tag entire sets of data in one go - for example, marking all highlights from an experiment with a specific account during a given week. More flexibility. Less manual tagging.

Match tags in Keyword Tagging Jobs by account ID 🎉

Keyword Tagging Jobs just became more powerful.

You can now match tags based on account ID using the new syntax: recording.account:ACCOUNT_ID

Perfect for tagging variants of A/B tests or experiments that run under different accounts.

Uberall integration adds emoji rating tags 🎉

Our Uberall integration now brings in rating fields as emoji tags - giving instant visual cues on how users rated their experiences (e.g. ⭐️⭐️⭐️⭐️⭐️ on Google Maps).

A small touch that makes your insights feel more human.

Sep 26, 2025

Weekly updates (26 Sep '25)

Another week, another round of improvements to make NEXT smarter, easier, and more powerful. Here’s what’s new:

Import reviews from Uberall 🎉

You can now bring reviews collected via Uberall directly into NEXT. This means social media and app store feedback (like Google Maps, Waze, and more) can flow seamlessly into your workspace for deeper analysis.

Connecting to our hosted MCP server made easy 🎉

We’ve launched a clean endpoint at mcp.eu-west-1.nextapp.co/stream for connecting MCP clients to NEXT.

A new Help Center guide walks you through authentication with teamspace tokens, supported transport, and when self-hosting may be the better fit.

AI message usage tracking 🎉

Transparency for your billing: you can now see exactly how many AI queries your team has used during the billing period.

Sep 19, 2025

Weekly updates (19 Sep '25)

Another week, another round of improvements that make our platform more powerful and user-friendly. Here’s what’s new:

Billing now aligned with contracts 🎉

We’ve reworked our billing system to perfectly match what contracts define. Instead of tracking transcribe minutes, we now measure recording minutes and non-media items - just as contracts specify.

Usage is visible directly on the Billing page

Default alerts now trigger on these dimensions

Access is automatically blocked once limits are exceeded

Every tenant is set up on starter/grow/scale plans, with custom plans applied where relevant

This makes billing clearer, more transparent, and easier to manage.

Lightweight previews in the Library 🎉

The Highlights Library now feels much lighter and easier to browse. Instead of showing long text previews, highlights appear in a compact form so you can focus on the essentials. If you do need the full details, you’ll still find them in the cluster dialog.

Documentation updates 🎉

We’ve expanded our Help Center with two new guides:

Sep 12, 2025

Weekly updates (12 Sep '25)

We’re back with another round of improvements that make your experience smoother, smarter, and more transparent. Here’s what’s new this week:

Budget alerts to stay in control 🎉

No more surprises when credits run out! Users can now set up budget alerts and receive notifications whenever they cross a usage threshold. This way, it’s easier to stay on top of consumption and plan ahead with confidence.
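One detail keeps alerts useful: they should fire once per threshold crossed, rather than on every usage update above it. The Python sketch below shows that check. The 50/80/100 percent thresholds, and comparing the previous and current usage totals, are assumptions for illustration, not NEXT’s configuration.

```python
from typing import List, Tuple


def crossed_thresholds(previous_usage: int, current_usage: int, budget: int,
                       thresholds_pct: Tuple[int, ...] = (50, 80, 100)) -> List[int]:
    """Return the thresholds (percent of budget) crossed between two usage readings.

    Integer arithmetic avoids floating-point surprises at exact boundaries.
    """
    return [
        pct for pct in thresholds_pct
        if previous_usage * 100 < pct * budget <= current_usage * 100
    ]


print(crossed_thresholds(400, 850, 1000))  # [50, 80]
print(crossed_thresholds(850, 900, 1000))  # []
```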

Transparent usage on the billing page 🎉

The billing page now displays the current usage of all features included in a plan. This gives users a clear overview of how features are being used - all in one place.

Smarter “Go deeper” suggestions 🎉

Follow-up prompts now include the full cluster context. This ensures Threads generate more relevant and informed responses, making deeper explorations more powerful and accurate.

Unified email layouts 🎉

All notification emails now follow a single, consistent layout. This upgrade brings visual harmony across communications and makes important updates easier to recognize at a glance.

Sep 5, 2025

Weekly updates (05 Sep '25)

We’ve been hard at work making NEXT smarter, faster, and easier to use. Here’s what’s new this week:

Configure AI prompts directly in Automations 🎉

No more jumping back and forth between AI Templates and the Automation builder! You can now configure your AI prompt and search filter directly in the AI Thread step of your automation.

This makes adjusting prompts faster, smoother, and much less cumbersome - giving you more control right where you need it.

Smarter, more accurate tagging 🎉

We’ve fine-tuned our algorithms to significantly improve automatic tagging of highlights, especially for complex job descriptions.

In testing, we’ve seen up to an 82% reduction in tagging errors, making your data cleaner and insights even more reliable.

Aug 29, 2025

Weekly updates (29 Aug '25)

Settings now indicate which tags are assigned by Jobs 🎉

Tags can come from different sources — manual assignments, imports, or automated Jobs. To make this clearer, the Tags Settings page now shows which tags are managed by Jobs using dedicated icons: ✨ for AI Jobs and 🔍 for Keyword tags, matching what you see in Job settings.

Highlight Search now suggests the tag_or option 🎉

Searching across multiple tags just got easier! The Highlight Search bar now suggests the tag_or option, allowing you to combine tags effortlessly when refining your results.

Threads more predictably narrow down on tags and dates 🎉

We’ve revamped how Threads focus on your filters. Threads now handle tag and date narrowing more reliably, avoiding those frustrating moments when your prompt would return zero results.

Aug 22, 2025

Weekly updates (22 Aug '25)

We’ve been busy improving NEXT to make your experience faster, smarter, and more delightful. Here’s what’s new this week:

User-Selectable Data Sources 🎉

You can now choose exactly which sources to query in AI Threads! Instead of scanning all connected repositories, you can direct NEXT to focus on Confluence, Google Drive, or both - improving relevance, performance, and resilience to rate limits.

Faster Suggest Search 🎉

Suggestions now load significantly faster as you type, making it easier than ever to find what you’re looking for.

CSV Highlight Upload Supports More File Encodings 🎉

Mass-uploading highlights just got easier! NEXT now automatically detects common file encodings, such as ISO-8859-1, so characters like umlauts are imported correctly - no more corrupted data when working with non-UTF-8 CSV files.
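NEXT’s detection logic isn’t described here beyond ISO-8859-1 being supported. The Python sketch below shows one simple approach that covers the umlaut case: try UTF-8 first and fall back to ISO-8859-1. This is an assumption, not NEXT’s method. Order matters, because ISO-8859-1 maps every byte to some character, never fails, and so must come last.

```python
from typing import Tuple


def decode_csv_bytes(raw: bytes) -> Tuple[str, str]:
    """Decode uploaded CSV bytes and report which encoding was used.

    'utf-8-sig' also strips a leading byte-order mark if one is present.
    """
    try:
        return raw.decode("utf-8-sig"), "utf-8-sig"
    except UnicodeDecodeError:
        # ISO-8859-1 decodes any byte sequence, so this fallback cannot raise.
        return raw.decode("iso-8859-1"), "iso-8859-1"


print(decode_csv_bytes("Müller".encode("iso-8859-1")))  # ('Müller', 'iso-8859-1')
```

One limitation of this fallback: files saved as Windows-1252 that use curly quotes would decode those characters incorrectly, so they would need an extra rule.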

Smarter Gong Imports 🎉

We’ve added two powerful new options for Gong integrations:

Filter by call quality: Only import calls with at least one external participant who has a job title, using the hasExternalPartyWithTitle:true LIQE filter.

Limit imports to specific folders: Manually select which Gong folders to sync, giving you full control over which calls make it into NEXT.

Cleaner AI Threads with Feedback 🎉

We’ve streamlined the AI Thread experience:

Direct feedback on AI responses: Mark answers as good or bad so we can continuously improve NEXT’s AI logic.

Simpler thread actions: We’ve removed underused buttons, making it easier to find the essentials like “Share” and “Try again”.

Copy AI Thread Content with Highlight Links 🎉

When copying AI Thread messages, NEXT now includes deeplinks to any highlights mentioned in the response — making it effortless to share context across apps.

Aug 15, 2025

Weekly updates (15 Aug '25)

Improved Thread Sharing Experience 🎉

Sharing AI responses just got better! Previously, our sharing flow was built for video clips, which left awkward gaps when sharing text-only AI responses. Now, AI responses can be shared purely as text - making them cleaner, easier to read, and more engaging.

Import Jira Custom Fields 🎉

You can now bring even more context into your highlights from Jira. Configure any custom fields - like “Design Rating” or “Impact Score” - to be imported as highlight tags. Just open your subscription in Integration Settings and add the fields you need.

Advanced Filtering for Gong Imports 🎉

Fine-tune exactly which Gong calls make it into NEXT. With the new LIQE query support, you can filter on any Gong field - perfect for importing only the calls that match your CRM data or other criteria. Learn more in our Help Center.

Limit Khoros Imports by Board 🎉

Focus on the discussions that matter most. You can now restrict Khoros imports to specific boards, ensuring you only bring in the conversations relevant to your analysis.

Aug 8, 2025

Weekly updates (8 Aug '25)

We’ve been busy making NEXT even more powerful and flexible. Here’s what’s new this week.

Import transcripts from Gong 🎉

You can now bring both transcripts and videos from Gong directly into NEXT, where they’re merged and ready for processing. Fine-tune your imports by setting a minimum call duration to skip ultra-short conversations, or sample your dataset (e.g., import just 5% of calls).

Note: Gong transcripts are slightly less granular than NEXT’s native transcripts, so word/video matching may be off by a few milliseconds.
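The Python sketch below shows one way the two import options could combine: a minimum-duration check followed by sampling. Hashing the call ID, instead of drawing a random number, keeps the 5% sample stable across re-runs. The changelog doesn’t say how NEXT samples. The 60-second default, the call IDs and the hashing approach are all assumptions.

```python
import hashlib


def should_import(call_id: str, duration_seconds: float,
                  min_duration_seconds: float = 60, sample_rate: float = 0.05) -> bool:
    """Skip calls shorter than the minimum, then keep a stable ~sample_rate share."""
    if duration_seconds < min_duration_seconds:
        return False
    digest = hashlib.sha256(call_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    return bucket < sample_rate


kept = sum(should_import(f"call-{i}", 600) for i in range(10_000))
print(kept)  # close to 500, i.e. about 5% of 10,000 long calls
```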

Import conversations from Khoros forums 🎉

NEXT can now automatically pull in conversations from Khoros forums, making it easier to capture valuable feedback from community discussions and analyze it alongside all your other data sources.

Usabilla integration now supports campaigns and widgets 🎉

We’ve expanded our Usabilla integration to cover all collection methods - including web & app campaigns, widgets, and email campaigns. This means every piece of Usabilla feedback, from in-page sliders to mobile surveys, can now be analyzed together with the rest of your feedback data.

Import sample items 🎉

When testing your import setup, you can now choose to bring in only a handful of sample items - avoiding the accidental import of thousands of recordings. Once you’re satisfied, you can either trigger a full import manually or let the automated process take over.

Aug 1, 2025

Weekly updates (1 Aug '25)

Here’s what shipped this week - short, sweet, and packed with power-ups.

A full Automation engine lands in NEXT 🎉

Automations just leveled up from simple trigger→action rules to multi-step workflows. Chain multiple triggers and actions, design flows visually, and pass data between steps with variables.

A few ideas to try:

Weekly digest: time-based trigger → AI Query → Send email

GitHub triage: “Issue created” trigger → AI Query → Comment on issue

Natural-language date focus in Threads 🎉

Go beyond fixed filters. Ask Threads for “Month of Oct,” “Last 10 days,” or “Q3 ’24,” and it’ll focus precisely on that timeframe - no manual fiddling required.
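NEXT resolves these phrases with its own logic, which the changelog doesn’t describe. The rule-based Python sketch below only illustrates what focusing on a timeframe means in practice: turning a phrase into a concrete, inclusive start and end date. It handles two of the example forms. It also assumes that “Last 10 days” includes today and that two-digit years mean 20YY.

```python
import re
from datetime import date, timedelta
from typing import Tuple


def resolve_timeframe(phrase: str, today: date) -> Tuple[date, date]:
    """Map 'Last N days' or 'Q<n> ’YY' to an inclusive (start, end) date pair."""
    text = phrase.strip().lower()

    days = re.fullmatch(r"last (\d+) days", text)
    if days:
        n = int(days.group(1))
        if n < 1:
            raise ValueError("need at least one day")
        return today - timedelta(days=n - 1), today  # today counts as day 1

    quarter = re.fullmatch(r"q([1-4]) ['’](\d{2})", text)
    if quarter:
        q, year = int(quarter.group(1)), 2000 + int(quarter.group(2))
        start = date(year, 3 * q - 2, 1)
        if q == 4:
            end = date(year, 12, 31)
        else:
            end = date(year, 3 * q + 1, 1) - timedelta(days=1)
        return start, end

    raise ValueError(f"unsupported timeframe: {phrase!r}")


print(resolve_timeframe("Q3 ’24", date(2025, 8, 1)))
# (datetime.date(2024, 7, 1), datetime.date(2024, 9, 30))
```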

CSV upload API: massively faster & more scalable 🎉

Bulk-importing highlights now flies - even at thousands or tens of thousands of rows.

Heads-up: we aligned CSV column names with the API (e.g., accountName → account_name). Check the docs for the full list of accepted fields: https://help.nextapp.co/en/articles/9996257-mass-upload-highlights-csv
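Only the accountName → account_name rename is confirmed here, and the linked article lists the accepted fields. Old CSV templates that follow the same camelCase-to-snake_case pattern could use a small Python helper like the one below to rename headers before upload. Check the result against the docs, since not every old column is guaranteed to map this way.

```python
import csv
import io
import re


def to_snake_case(column: str) -> str:
    """'accountName' -> 'account_name'; names already in snake_case are unchanged."""
    return re.sub(r"(?<=[a-z0-9])([A-Z])", r"_\1", column).lower()


def migrate_csv_header(csv_text: str) -> str:
    """Rewrite only the header row of a CSV file to snake_case column names."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if rows:
        rows[0] = [to_snake_case(name) for name in rows[0]]
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(rows)
    return out.getvalue()


print(migrate_csv_header("text,accountName\nSlow login,Acme\n"), end="")
# text,account_name
# Slow login,Acme
```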

Mopinion integration: access raw quantitative data 🎉

Alongside qualitative insights, you can now pull the raw responses to multi-choice questions from Mopinion reports - directly inside AI Threads - for richer, mixed-methods analysis.

Front integration: delayed imports & cleaner threads 🎉

Set a grace period before archived conversations are imported from Front, so reopens don’t slip through. We also stop importing Front comments to keep internal notes separate from user feedback.

That’s a wrap for this week - more improvements are already in the works. If you try any of these, we’d love to hear how they help your workflow!

Jul 25, 2025

Weekly updates (25 Jul '25)

Sharper Feedback Type Taxonomy 🎉

New teamspaces now launch with just five laser‑focused tags instead of seven overlapping ones. Every incoming highlight lands in the right bucket instantly, so your team can spot patterns (and act on them) faster than ever.

Instant Text Previews in Cluster Dialog 🎉

No video or audio in a cluster? No problem. The dialog now shows featured text highlights on the spot, giving you a quick, scannable feel for what users said - zero extra clicks required.

AI Clusters Speak Your Team’s Language 🎉

Set your preferred language once in Settings → AI, and every new cluster will be generated in that language automatically. Research insights now feel native from the first glance.

Jul 18, 2025

Weekly updates (18 Jul '25)

Upload files directly to your Thread 🎉

Bring your own data! You can now drop files - anything from CRM exports to policy PDFs - straight into a Thread. NEXT automatically taps those documents when crafting answers, so you can blend private insights with tenant‑wide knowledge on the fly.

Connect Google Drive folders for always‑fresh context 🎉

Skip the manual uploads and point NEXT at a Drive folder instead. Once connected in Settings → Integrations, every doc inside (brand guidelines, research decks, you name it) becomes on‑demand fuel for your Threads.

Dive deeper into cluster insights 🎉

Click the new magnifier icon on any cluster chart to expand from the top five groups to the full set. The enlarged view surfaces every cluster plus quick-hit examples, so you can validate themes without leaving the page.

Smarter highlight search with OR suggestions 🎉

Power users love the tag_or: filter, and now our autocomplete does too. The moment you start an OR query, search suggestions flip to OR logic - making complex highlight hunts a breeze.

Lightning‑fast integration search 🎉

With dozens of integrations (and counting), scrolling was yesterday’s news. Start typing an integration name and the list filters in real time, getting you from “where is it?” to “connected” in seconds.

Translate video transcripts on the fly 🎉

Record in Spanish, share in English - no extra steps. Our upgraded model transcribes in the speaker’s language and delivers an instant translation into any language you pick under Teamspace → Translation Settings. Think native‑quality captions, minus the manual effort.

Stay tuned - more magic is on the way!

Jul 11, 2025

Weekly updates (11 Jul '25)

Here's what's new in NEXT this week - powerful improvements to help you get even more out of your AI-driven workflows!

Assistants are now more process-oriented 🎉

Previously, configuring assistants was flexible but lacked guidance, causing mixed outcomes. Now, we've baked in best practices by letting you define precise AI actions for specific scenarios. Your assistants just got smarter - and easier to manage.

Users can now triangulate their NEXT data with the web 🎉

AI Threads have expanded their capabilities! If your question requires external context, NEXT seamlessly fetches public data from the web - from forums, industry reports, and benchmarks - to provide comprehensive answers. Level up your insights by combining your data with the world's knowledge.

Threads can now pull data from Confluence 🎉

Say hello to our new Atlassian integration! AI Threads can dynamically search and incorporate Confluence pages alongside Jira issues. Accessing internal knowledge bases and tickets just became effortless, keeping your entire team's information seamlessly connected.

Users can now abort agent execution 🎉

Ever felt your agent was heading off-course? No problem! With our new stop button, you can instantly stop execution, then refine your request or add context before continuing. Stay in control, every step of the way.

Recordings now support speaker-level insights 🎉

We've supercharged recording analysis by adding rich, speaker-level context:

Configure job titles per speaker: Instantly understand who said what, in the context of their organizational role.

Speaker context in highlights: Easily track the origin of every highlight with clear speaker labeling, both in the highlight library and detailed dialogs.

Advanced tagging by speaker attributes: Use advanced queries (contributor_name:xxx,contributor_title:xxx) to assign personas automatically and gain deeper insights from interviews.

Salesforce integration supports server-to-server authentication 🎉

Integrating Salesforce just became simpler and more secure! NEXT now supports Salesforce's recommended OAuth Client Credentials flow, enabling seamless server-to-server integration. Streamline your Salesforce workflow without tying the connection to an individual user's account.

Import reviews directly from Google Play 🎉

Stay ahead of the curve by automatically importing user reviews from Google Play. Instantly capture and analyze Android user feedback right within NEXT, keeping your team tuned in to your users’ experiences.

Stay tuned for more exciting updates next week - happy exploring!

Jul 4, 2025

Weekly updates (4 Jul '25)

Here’s a quick roundup of what’s new this week:

Configure Scheduled Automation Run Time 🎉

You can now pick the exact day and hour for each scheduled automation. Gone are the days of “daily at some mysterious hour”: you decide exactly when each run happens.

Jobs Can Now Focus on Account Fields 🎉

Jobs can be tailored to surface highlights based on any account field. Need to know why certain accounts churned, or only tag feedback from UK-based profiles? This update has you covered.

See Type of Tagging in the Overview 🎉

We’ve added a clear “Tagging Type” column to your job list, so you can instantly tell which are search-based and which use AI. Plus, you can now re-run search tagging jobs just like their AI counterparts.

NEXT Imports Rating + Hidden Fields from Typeform 🎉

Our Typeform integration just got more powerful: free-text and multi-choice answers are joined by ratings and hidden fields in your imports, giving you fuller context right away.

Jun 27, 2025

Weekly updates (27 Jun '25)

A fresh batch of improvements just landed! Here’s what our team shipped over the past week to make your workflow even smoother.

Highlights at a Glance 🎉

The cluster dialog now features a highlights chart, giving you an instant visual overview - even when you’re working with clusters packed with thousands of highlights.

Threads Just a Click Away 🎉

Finding ongoing conversations is easier than ever. “Search threads” has returned to the main navigation, so you can jump straight to any existing thread from anywhere in the app.

Ratings in CSV Uploads 🎉

Bulk imports now accept a rating column. Add star ratings directly in your CSV to capture user sentiment without relying on integrations.

Snappier Library Browsing 🎉

Highlights, recordings, and clusters now load noticeably faster - especially on repeat visits - so you can zip through your library without the wait.

Enjoy the new goodies, and stay tuned for next week’s drop!

Jun 20, 2025

Weekly updates (20 Jun '25)

Here's what's new at NEXT this week:

NEXT has a new home experience 🎉

Say goodbye to the Threads landing page! Users now land on a sleek, informative home page that immediately shows which data has been loaded into their teamspace. Plus, users can kick off conversations instantly by asking direct questions and easily refining their scope for even more precise Threads.

Start Threads directly from Clusters and Dashboards 🎉

Threads just got even more integrated! Now, users can instantly dive deeper by starting new Threads directly within the cluster dialog and from dashboards. This means your insights and conversations are always one click away.

NEXT can now handle an unlimited number of Threads 🎉

We’re thrilled that users loved Threads beyond our wildest dreams - so much that we needed to scale up! The platform and the UI are now optimized to effortlessly handle a virtually unlimited number of Threads. The Thread detail page is streamlined to focus solely on the current Thread, significantly boosting load speeds. Need a previous Thread? Just tap the search icon for instant access.

Additionally, Thread history is now directly accessible from the AI prompt box, keeping your workflow seamless.

Import Typeform surveys directly into NEXT 🎉

Bring your survey data directly into NEXT! With our new Typeform integration, your team can effortlessly import and analyze survey responses, turning valuable feedback into actionable insights.

New filter: Highlights imported in the last 24h 🎉

Setting up daily digests just got easier! Users can now filter highlights based on their import date - not just the recorded date. This precise filter ensures your daily digests capture exactly what's fresh without any duplicates or missed data.

UX improvements 🎉

Clearer Thread Visualization: Threads now clearly indicate when they’re processing or generating a response, so you can follow the thinking process step by step.

Smoother Cluster Loading: No more guessing whether a cluster is loading. Clicking a cluster now immediately opens the cluster dialog with a clear loading indicator, for a noticeably smoother experience.

Stay tuned for more next week!

Jun 13, 2025

Weekly updates (13 Jun '25)

Here’s what’s new this week - all designed to make your experience faster, clearer, and just plain smarter. Let’s dive in.

Follow Along as NEXT AI Thinks 🎉

You can now see the thinking process behind every Thread. This adds transparency and helps users understand why NEXT AI answers the way it does. It’s also a great way to refine your next prompt - especially when there’s hidden context the AI might not know yet.

Dashboards Are Now Truly Dashboards 🎉

We’ve reimagined dashboards to reflect how users actually work. Instead of answering a single question, dashboards now provide a broader overview of a focus area - and suggest the right prompts to dive deeper.

The interface is much calmer and easier to scan. No more visual overload - just what you need, when you need it. To get there, we've trimmed down the dashboard list section so dashboards are easier to navigate and faster to load:

Only clusters shown - no more endless highlights

Removed filters and sorting - because no one was using them

Simplified cluster cards - now without distracting analytics

The result? A cleaner, faster way to spot what matters most.

Smarter Focus Picking for Threads 🎉

Threads now rely on high-quality, AI-assigned tags to define their scope. This avoids noisy, low-quality tags from imports and leads to more relevant answers.

Bonus: The agent also got better at picking the right tools for each job - especially when generating themes.

Better Handling of Offensive Feedback 🎉

Not all user feedback is polite - but it’s still valuable. We’ve relaxed our input filters to process survey responses and reviews even if they include strong language. This gives you a more complete picture of what users really think.

Bonus: Our AI processing also got faster across the board.

Users Can Now Import Feedback from Usabilla Forms 🎉

In addition to Usabilla buttons, you can now import feedback from Usabilla forms as well. More coverage, less hassle.

Clusters Now Suggest Prompts to Dive Deeper 🎉

Each cluster now comes with tailored prompt suggestions. Just click to kick off a new Thread and explore the details with NEXT AI.

Jun 6, 2025

Weekly updates (6 Jun '25)

It’s been a productive week at NEXT - our team shipped several powerful improvements to make your workflows smoother, smarter, and more precise. Here’s what’s new:

Match highlights to a single cluster 🎉

You no longer need to regenerate all clusters just to tweak one. From the UI, you can now rematch highlights against a single cluster - perfect for fine-tuning themes without starting from scratch.

Threads got smarter about choosing data 🎉

Threads now interpret your intent more accurately:

When you select a specific highlight, the AI focuses only on that, avoiding unrelated data.

If you don’t ask for a date filter, we won’t add one.

Plus: Threads keep it concise, limiting inline evidence to just 3 annotations per fact.

Smarter, multi-tool Threads 🎉

Threads can now generate themes and trigger multiple tools in one go:

They auto-pull existing clusters (or create new ones) to enrich responses.

They select the right tools more accurately, producing responses that are both broader and deeper.

Limit Tagging and Clustering Jobs by source 🎉

You can now scope your Tagging or Clustering Jobs to a specific data source. Whether it's HubSpot support calls or a targeted Qualtrics survey - tailor your analysis exactly to where the data came from.

Let us know what you think - and stay tuned for next week’s batch of updates!

May 30, 2025

Weekly updates (30 May '25)

Here’s what shipped this week - each one designed to shave hours off your workflow and make insight-gathering feel like magic.

Generate PowerPoint presentations with AI 🎉

Craving a client-ready deck in minutes? Simply tell NEXT AI what story you want to tell and voilà: a PowerPoint file lands in your Thread, complete with slides and a logical narrative flow.

Front ticket import & auto-analysis 🎉

We’ve added a native connector to Front, the shared-inbox platform. Pull every customer exchange straight into NEXT, where AI tags sentiment, themes, and urgency automatically. Support conversations transform into actionable product feedback - minus the spreadsheet gymnastics.

Highlight-aware transcript edits 🎉

Typos happen. Now, when you correct a recording transcript, any highlights that overlap that segment update instantly. Your clipped quotes stay perfectly in sync with the source audio, ensuring every insight you share is accurate down to the last letter.